
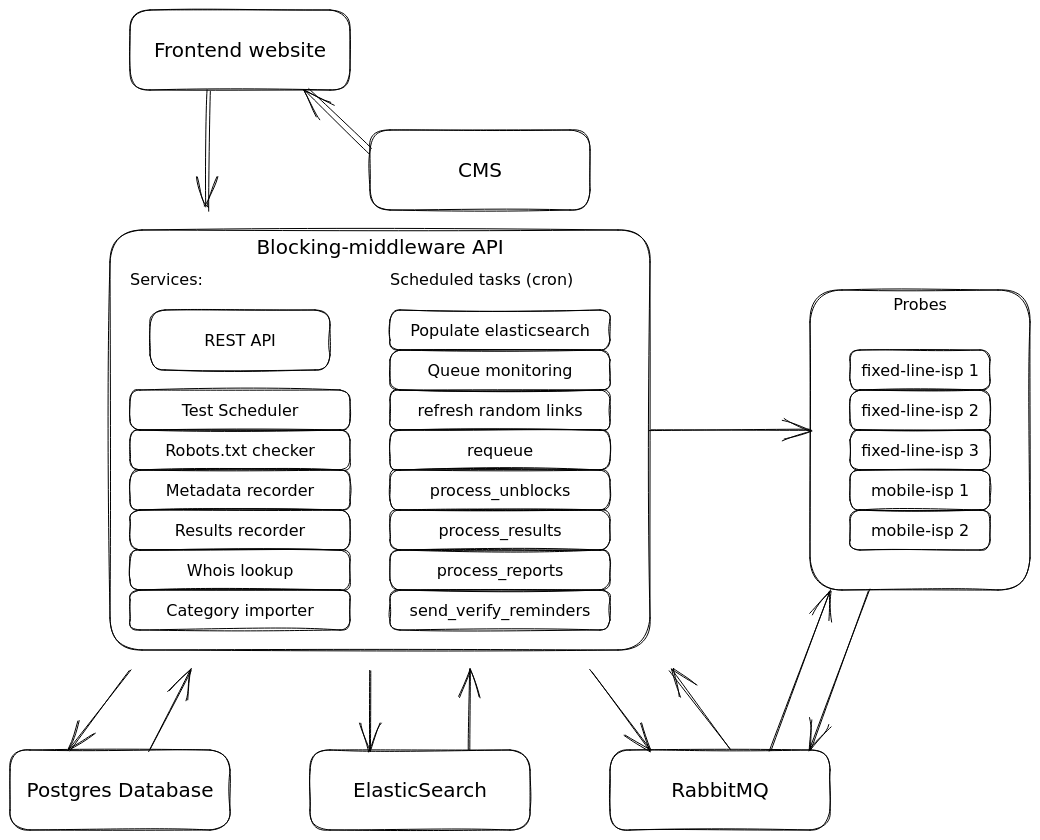

Components

Python daemon that periodically re-runs batches of URLs through the probes to gather fresh results. Batch and test configuration is modifiable through a web UI.

Before a URL is submitted to the probes, the site's robots.txt is fetched and checked against the probe's user-agent.

If robots.txt disallows the probe's user-agent, the URL database entry is updated and the test request is dropped.

If the probe is allowed by the target site's robots.txt, the request is forwarded to the RabbitMQ topic for distribution to the probes.
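The robots.txt check described above can be sketched with Python's standard `urllib.robotparser`. This is a minimal illustration, not the project's actual code: the function name `probe_allowed`, the `ExampleProbe` user-agent and the sample robots.txt content are all assumptions.

```python
from urllib.robotparser import RobotFileParser


def probe_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network access is needed here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content and user-agent name, for illustration only.
EXAMPLE_ROBOTS = """\
User-agent: ExampleProbe
Disallow: /

User-agent: *
Disallow: /private/
"""

if __name__ == "__main__":
    # The named probe is disallowed everywhere, so its request would be dropped.
    print(probe_allowed(EXAMPLE_ROBOTS, "ExampleProbe", "http://example.com/"))
    # Other agents are only barred from /private/.
    print(probe_allowed(EXAMPLE_ROBOTS, "OtherBot", "http://example.com/index.html"))
```

A caller would drop the test request and update the URL record when the function returns `False`, and publish the request to the queue otherwise.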

In parallel with the probe tests, the API server retrieves a copy of the target URL webpage. If title and description metatags are present in the HTML page, this information is added to the database entry for that URL to facilitate full-text searching.
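As a rough sketch of the metadata step, the title and description can be pulled out of a page with the standard-library `html.parser`. The class and function names (`MetaExtractor`, `extract_metadata`) are hypothetical, and the real service may use a different parser.

```python
from html.parser import HTMLParser


class MetaExtractor(HTMLParser):
    """Collects the <title> text and the description meta tag, if present."""

    def __init__(self):
        super().__init__()
        self.title = None
        self.description = None
        self._in_title = False
        self._title_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            attr = dict(attrs)
            # Attribute values can be None, hence the "or ''" guard.
            if (attr.get("name") or "").lower() == "description":
                self.description = attr.get("content")

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self._in_title = False
            self.title = "".join(self._title_parts).strip()

    def handle_data(self, data):
        # Title text may arrive in several chunks, so accumulate it.
        if self._in_title:
            self._title_parts.append(data)


def extract_metadata(html: str) -> dict:
    """Return the title and description found in html (None when absent)."""
    parser = MetaExtractor()
    parser.feed(html)
    parser.close()
    return {"title": parser.title, "description": parser.description}
```

Either value is `None` when the page lacks the corresponding tag, which matches the "if present" condition above.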

Results from probe tests are returned to the system through a RabbitMQ results queue. This service listens on the results queue and inserts the result records into the database.
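The insert step might look roughly like the following. This sketch assumes results arrive as JSON message bodies and uses an in-memory-capable `sqlite3` table as a stand-in for the real datastore; the table layout and the field names (`url`, `probe`, `status`, `created`) are illustrative assumptions, and the queue consumer itself is left out.

```python
import json
import sqlite3


def create_results_table(conn: sqlite3.Connection) -> None:
    """Create a hypothetical results table (not the project's real schema)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS results ("
        "url TEXT NOT NULL, probe TEXT NOT NULL, "
        "status TEXT NOT NULL, created TEXT NOT NULL)"
    )


def store_result(conn: sqlite3.Connection, body: bytes) -> None:
    """Decode one message body from the results queue and insert it as a row."""
    msg = json.loads(body)
    conn.execute(
        "INSERT INTO results (url, probe, status, created) VALUES (?, ?, ?, ?)",
        (msg["url"], msg["probe"], msg["status"], msg["created"]),
    )
    conn.commit()
```

In a real consumer, `store_result` would be called from the message callback of whichever RabbitMQ client library is in use, and the message acknowledged only after the commit succeeds.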

In parallel with the probe tests, the API server will attempt to run a whois lookup on the target domain and record expiry information if available.
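Whois output is free-form text whose layout varies by registry, so recording expiry "if available" typically means a best-effort parse. The sketch below assumes the raw whois response is already available as a string; the field labels and date formats it recognizes are a small illustrative subset, and `parse_expiry` is a hypothetical name.

```python
import re
from datetime import datetime

# A few expiry labels seen in whois responses; real responses vary widely.
EXPIRY_PATTERN = re.compile(
    r"^\s*(?:Registry Expiry Date|Expiry date|Expiration Date)\s*:\s*(\S+)",
    re.IGNORECASE | re.MULTILINE,
)

# Date layouts tried in order; "%d-%b-%Y" relies on English month names.
DATE_FORMATS = ("%Y-%m-%dT%H:%M:%SZ", "%Y-%m-%d", "%d-%b-%Y")


def parse_expiry(whois_text: str):
    """Return the expiry date as a datetime, or None if it cannot be found."""
    match = EXPIRY_PATTERN.search(whois_text)
    if not match:
        return None
    value = match.group(1)
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt)
        except ValueError:
            continue
    return None
```

Returning `None` for unrecognized responses lets the caller simply skip the expiry field instead of failing the whole lookup.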

In parallel with the probe tests, this service queries the Categorify web API to fetch category information for the target site. This is added to the URL database and the full text search database.

Gathers the latest changes from the URLs table(s) in the database and updates the Elasticsearch full-text index.

Periodically writes the current RabbitMQ queue sizes to allow the test scheduler to provide feedback on stalled probes.

The API provides a random links route for prompting visitors to review blocked sites for accuracy and "correctness". To save database read capacity, these links are pre-selected and stored in Redis.

The original system for re-processing old URL submissions. This runs through a subset of previous test URL submissions in last-checked order, sending them again to probes for retesting.
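Selecting a batch in last-checked order could be as simple as the query below. The sketch uses `sqlite3` as a stand-in database and assumes a hypothetical `urls` table with `url` and `last_checked` columns; it also assumes "last-checked order" means oldest first.

```python
import sqlite3


def select_urls_for_retest(conn: sqlite3.Connection, limit: int) -> list:
    """Return up to `limit` URLs, least recently checked first.

    In SQLite, NULL sorts before other values in ascending order, so URLs
    that have never been checked come first.
    """
    rows = conn.execute(
        "SELECT url FROM urls ORDER BY last_checked ASC LIMIT ?",
        (limit,),
    ).fetchall()
    return [row[0] for row in rows]
```

Each selected URL would then be resubmitted to the probes and its `last_checked` value updated, so the next run moves on to a different subset.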

When the blocked status for a site changes (from blocked to OK), this task updates related database records and can optionally send email updates to "watchers" of the target site.

Monitors incoming changes to URL status (blocked/OK) and sends any ISP reports that were waiting for fresh results.

If sites have been flagged by users for unwanted content (shock site, virus), this task removes them from the full-text search index.

Users who have not yet completed the email verification process will be sent a limited number of reminders by this process.

Primary datastore for test URLs, results, latest status, ISPs and ISP unblock requests.

Full-text search index for searching sites by category or keyword.

Queue broker responsible for queueing and dispatching test cases to probes.