This is one of the most relevant projects for the current era and the future, as it aims to solve one of the most critical problems we face today...

It's 2024 and we can no longer trust the content we see on the internet. With the rise of AI-generated content, it's hard to distinguish between real and fake content. This project aims to solve this problem by detecting AI-generated text/content using a custom AI model trained on a large dataset.

This is an AI text/content detection program that uses a custom model, trained using GPT-4, DebertaModel, DebertaModelv3, SlimPajama and other models, datasets and techniques, to detect and score the content provided as input. It is fine-tuned on a large dataset to produce the most accurate results possible.

Full Walkthrough >5mins

- CUDA-enabled GPU

- Python 3.10.6

Check and note down your CUDA information:

```shell
nvcc --version # or
nvidia-smi
```

Download and install the CUDA Toolkit and driver if they are not already installed. (This project also works on the CPU, but a CUDA-enabled GPU is recommended for better performance.)
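The CUDA version you note down here determines which PyTorch build to install later in this walkthrough (e.g., CUDA 12.1 = cu121). As a minimal sketch, the Python helper below (standard library only; the function names are hypothetical and not part of this project) parses the `release X.Y` fragment printed by `nvcc --version` and builds the matching PyTorch index URL. It assumes `nvcc` is on your PATH. PyTorch only publishes wheels for specific CUDA versions, so check the generated URL against the PyTorch install page before using it.

```python
import re
import shutil
import subprocess
from typing import Optional

# Matches the "release X.Y" fragment printed by `nvcc --version`,
# e.g. "Cuda compilation tools, release 12.1, V12.1.105".
_RELEASE_RE = re.compile(r"release (\d+)\.(\d+)")


def parse_cuda_release(nvcc_output: str) -> Optional[str]:
    """Return the CUDA release (e.g. "12.1") found in nvcc output, or None."""
    match = _RELEASE_RE.search(nvcc_output)
    if match is None:
        return None
    return f"{match.group(1)}.{match.group(2)}"


def wheel_tag(cuda_release: str) -> str:
    """Map a CUDA release such as "12.1" to a PyTorch wheel suffix ("cu121")."""
    major, minor = cuda_release.split(".")[:2]
    return f"cu{major}{minor}"


def torch_index_url(cuda_release: str) -> str:
    """Build the --index-url value for the PyTorch install command."""
    return f"https://download.pytorch.org/whl/{wheel_tag(cuda_release)}"


def detect_cuda_release() -> Optional[str]:
    """Run `nvcc --version` if it is on PATH and parse the release number."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(["nvcc", "--version"], capture_output=True, text=True)
    return parse_cuda_release(result.stdout)


if __name__ == "__main__":
    release = detect_cuda_release()
    if release is None:
        print("nvcc not found: install the CUDA Toolkit or use the CPU build")
    else:
        print(f"CUDA {release} -> {torch_index_url(release)}")
```

Keeping the parsing separate from the subprocess call means the version mapping can be checked on machines without a GPU.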

Download and install Python 3.10.6 if it is not already installed. (I recommend this version for compatibility with the torch dependencies.)

Clone the main repository and move into the directory:

```shell
git clone https://github.com/jesvijonathan/AI-Generated-Text-Detection
cd AI-Generated-Text-Detection
```

Download the model weights (~9 GB) from here and extract them into the weights directory:

```shell
# wget https://www.mediafire.com/file/7n4b2e1geeuzu69/weights.zip/file -O weights.zip
unzip weights.zip -d ./weights
```

Create and activate the virtual environment:

```shell
python -m venv env
source env/bin/activate
```

Install the CUDA (GPU) build of the PyTorch dependencies. Check your CUDA version and replace the version suffix at the end of the index URL below to match it (e.g., CUDA 12.1 = cu121):

```shell
python -m pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121
```

Install the project requirements:

```shell
pip install -r packages.txt
```

Run app.py (make sure to adjust the parameters in config.py first):

```shell
python app.py
```

Clone the frontend repository and move into the directory:

```shell
cd ../
git clone https://github.com/jesvijonathan/Snitch-GPT-Frontend
cd Snitch-GPT-Frontend
```

Install the npm project dependencies and build the app:

```shell
npm install
npm run build
```

Run the frontend application:

```shell
npm run dev
```

Open the application in your web browser:

```text
http://localhost:5173 # for App
http://localhost:5000 # for Legacy App
http://localhost:5000/api # for API
```

- Use the application via the API or the web interface.

- Refer to the frontend repository for more details.

- Both the frontend and backend applications should be running simultaneously.

- Configure the parameters in the config.py file for better results.
- Ensure you meet the recommended hardware and software requirements:
  - GPU Mode (recommended, significantly better performance):
    - CUDA-enabled GPU
    - 4 GB+ VRAM (12 GB+ recommended with all 6 models loaded)
  - CPU Mode (not recommended, although you can use it in MIX mode alongside the GPU):
    - 4+ core CPU (8+ cores recommended)
    - 2.5 GHz+ clock speed
    - 16 GB RAM (32 GB+ recommended)
  - Min. 40 GB storage (SSD recommended for faster swap and loading times)
  - Python 3.10.6


- Insufficient RAM or VRAM may cause poor performance or crashes during execution.

- Insufficient storage may cause the program to crash due to a lack of space for the model weights or swap memory.

- Switch to sequential mode and disable some models to reduce memory usage if you run into memory issues.

- This project works best on texts longer than 250 characters; it is trained on lengthy texts using BERT/DebertaModel along with 3M GPT-4 data.
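Some of the requirements listed above can be checked before launching app.py. The sketch below is a hypothetical pre-flight script, not part of this project. It checks the logical CPU count (note that `os.cpu_count()` counts hardware threads, not physical cores), the free disk space in the current directory against the 40 GB minimum (assuming that figure means free space), and the Python version. RAM, VRAM and clock speed are not checked, since the standard library offers no portable way to read them.

```python
import os
import shutil
import sys
from typing import List, Tuple

# Thresholds taken from the requirements list in this README.
MIN_CPU_CORES = 4
RECOMMENDED_CPU_CORES = 8
MIN_FREE_DISK_GB = 40  # assumption: the 40 GB minimum refers to free space
RECOMMENDED_PYTHON = (3, 10, 6)


def check_requirements(
    cpu_cores: int, free_disk_gb: float, python_version: Tuple[int, int, int]
) -> List[str]:
    """Return a human-readable warning for each unmet requirement."""
    warnings = []
    if cpu_cores < MIN_CPU_CORES:
        warnings.append(f"only {cpu_cores} CPU cores (4+ required, 8+ recommended)")
    elif cpu_cores < RECOMMENDED_CPU_CORES:
        warnings.append(f"{cpu_cores} CPU cores (8+ recommended)")
    if free_disk_gb < MIN_FREE_DISK_GB:
        warnings.append(f"only {free_disk_gb:.1f} GB free disk (40 GB+ required)")
    if python_version != RECOMMENDED_PYTHON:
        found = ".".join(map(str, python_version))
        warnings.append(f"Python {found} (3.10.6 recommended)")
    return warnings


def check_this_machine(path: str = ".") -> List[str]:
    """Read values from the current machine and check them."""
    cores = os.cpu_count() or 0  # logical CPUs; None is treated as 0
    free_gb = shutil.disk_usage(path).free / 1e9  # decimal gigabytes
    return check_requirements(cores, free_gb, tuple(sys.version_info[:3]))


if __name__ == "__main__":
    problems = check_this_machine()
    for problem in problems:
        print("warning:", problem)
    if not problems:
        print("CPU count, disk space and Python version look fine")
```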

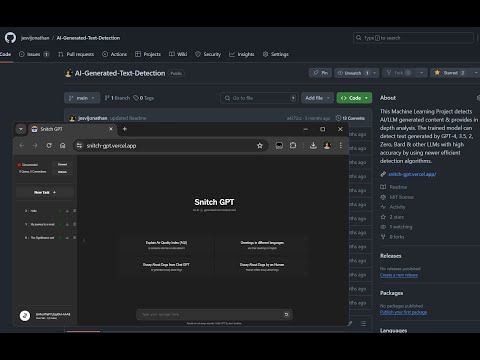

- Use this project together with its companion frontend, Snitch-GPT, which adds visualizations and more.

- Adjust the parameters in the config.py file to suit your needs (you will have to modify the default parameters).

- The model is trained on a large custom dataset for the most accurate results. Results may not always be accurate; use at your own risk.

- I know most of it is spaghetti code right now, but it works. Will refactor soon. 0_0

- Detect AI-generated text/content.

- Score the content based on multiple parameters & the probability of being AI-generated.

- Model trained on a broad, large dataset for the most accurate results.

- Uses multiple new models and techniques to improve accuracy.

- Interactive web app interface.

- API availability to integrate the application with other services.

- Minimal & easy to use, can save research time.

- Customizable parameters to adjust the model for better results.

- Maximum efficiency: makes full use of available hardware for significant performance boosts and faster results.

- Supports one-shot detection and other checks.

- ~300 ms execution time in optimal cases.

- And many more...

- Legacy App Endpoint:
  - http://localhost:5000/ - Home Page

- WebSocket Endpoints:
  - ws://localhost:5000/socket.io/ - Connect to the WebSocket
  - ws://localhost:5000/socket.io/ - Disconnect from the WebSocket
  - ws://localhost:5000/socket.io/ - Get the values from the WebSocket

- HTTP (API) Endpoints:
  - http://localhost:5000/api/ - API Home Page
  - http://localhost:5000/api/token - Get a token from the API
  - http://localhost:5000/api/remove - Remove the token from the API
  - http://localhost:5000/api/job - Get/Post job requests
    - GET: http://localhost:5000/api/job?user_id=ef933904&text=hello%20world%20jesvi
    - POST: http://localhost:5000/api/job {"user_id": "ef933904", "text": "hello world jesvi"}

- Snitch-GPT App Endpoints:
  - Please refer to the Snitch-GPT Docs.
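As a minimal sketch of calling the job endpoint from Python using only the standard library, the helpers below (hypothetical names, not part of this project) build GET and POST requests in the same shape as the /api/job examples above. They assume the backend is running on localhost:5000 and that you already have a valid user_id. The response format is not documented here, so `send()` simply returns the raw body. The 250-character check mirrors this README's note that the models work best on longer texts.

```python
import json
import urllib.request
from urllib.parse import quote, urlencode

BASE_URL = "http://localhost:5000/api"  # backend started with `python app.py`
MIN_RECOMMENDED_CHARS = 250  # the models work best on texts above this length


def build_get_job(user_id: str, text: str) -> urllib.request.Request:
    """Build a GET /api/job request; spaces are encoded as %20."""
    query = urlencode({"user_id": user_id, "text": text}, quote_via=quote)
    return urllib.request.Request(f"{BASE_URL}/job?{query}", method="GET")


def build_post_job(user_id: str, text: str) -> urllib.request.Request:
    """Build a POST /api/job request with a JSON body."""
    body = json.dumps({"user_id": user_id, "text": text}).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/job",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the request and return the raw response body as text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    text = "hello world jesvi"
    if len(text) < MIN_RECOMMENDED_CHARS:
        print(f"note: texts under {MIN_RECOMMENDED_CHARS} characters may score poorly")
    try:
        print(send(build_post_job("ef933904", text)))
    except OSError as exc:  # URLError subclasses OSError (e.g. server not running)
        print("request failed:", exc)
```

Building the Request objects separately from `send()` keeps the URL and payload construction testable without a running server.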