An easily deployable, single-instance version of Snowplow that serves three use cases:

- Gives a Snowplow consumer (e.g. an analyst / data team / marketing team) a way to quickly understand what Snowplow "does", i.e. what you put in at one end and take out of the other

- Gives developers new to Snowplow an easy way to start with Snowplow and understand how the different pieces fit together

- Gives people running Snowplow a quick way to debug tracker updates (because they can)

Features:

- Data is tracked and processed in real time

- Added Iglu Server to allow for custom schemas to be uploaded

- Data is validated during processing

  - This is done using both our standard Iglu schemas and any custom ones that you have loaded into the Iglu Server

- Data is loaded into OpenSearch

  - Can be queried directly or through an OpenSearch dashboard

  - Good and bad events are in distinct indexes

- Created a UI to indicate what is happening with each of the different subsystems (collector, enrich, etc.), so as to give developers an in-depth understanding of how the different Snowplow subsystems work with one another
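Validation starts from the schema reference that each self-describing event carries. The Python below is a minimal sketch, not Snowplow's own code. It parses an Iglu schema URI of the form `iglu:vendor/name/format/model-revision-addition` into its parts, which are the same coordinates that identify a schema in an Iglu registry. The `com.acme` vendor, the `button_click` name and the `parse_iglu_uri` / `SchemaKey` names are hypothetical. The character classes in the regex approximate the Iglu grammar rather than reproduce it exactly.

```python
import re
from typing import NamedTuple

# Approximate grammar: iglu:<vendor>/<name>/<format>/<MODEL>-<REVISION>-<ADDITION>
# (SchemaVer: MODEL starts at 1, REVISION and ADDITION start at 0).
IGLU_URI = re.compile(
    r"^iglu:(?P<vendor>[A-Za-z0-9_.-]+)/(?P<name>[A-Za-z0-9_-]+)/"
    r"(?P<format>[A-Za-z0-9_-]+)/"
    r"(?P<model>[1-9][0-9]*)-(?P<revision>0|[1-9][0-9]*)-(?P<addition>0|[1-9][0-9]*)$"
)


class SchemaKey(NamedTuple):
    vendor: str
    name: str
    format: str
    version: tuple[int, int, int]


def parse_iglu_uri(uri: str) -> SchemaKey:
    """Split an Iglu URI into vendor, name, format and SchemaVer version."""
    match = IGLU_URI.match(uri)
    if match is None:
        raise ValueError(f"not a valid Iglu URI: {uri!r}")
    version = (int(match["model"]), int(match["revision"]), int(match["addition"]))
    return SchemaKey(match["vendor"], match["name"], match["format"], version)


# A self-describing JSON event: the "schema" field names the schema that
# "data" must validate against (hypothetical vendor and event name).
event = {
    "schema": "iglu:com.acme/button_click/jsonschema/1-0-0",
    "data": {"button_id": "signup"},
}

key = parse_iglu_uri(event["schema"])
print(key.vendor, key.name, key.version)  # com.acme button_click (1, 0, 0)
```

During enrichment, a schema with these coordinates must be resolvable from one of the configured registries (the standard Iglu schemas or your own Iglu Server) for the event to pass validation.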

Note: Until version 0.15.0, Snowplow Mini loaded Snowplow data into Elasticsearch 6.x. However, a licensing change in Elasticsearch prevented us from upgrading to more recent versions. To stay up to date with important security fixes, we've replaced Elasticsearch with OpenSearch, and Kibana with OpenSearch Dashboards. You may still encounter the terms "elasticsearch" and "kibana" in the project.

Cloud setup guides for AWS and GCP, in addition to a usage guide, are available at our docs website.

To run Snowplow Mini on your local machine, you will need to install the following prerequisites:

- VirtualBox

- Vagrant (2.0.0 or later)

You should then be able to stand up Snowplow Mini locally by running:

$ git clone https://github.com/snowplow/snowplow-mini.git

Cloning into 'snowplow-mini'...

$ cd snowplow-mini

$ vagrant up

Bringing machine 'default' up with 'virtualbox' provider...

This will take a little time to complete, so grab yourself a ☕️ and come back in a few minutes. See the troubleshooting section below if you encounter any errors.

Once complete, a Snowplow Collector will be running on http://localhost:8080 and the Snowplow Mini UI will be on http://localhost:2000/home.
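Once the collector is up, you can check the pipeline end to end by sending a hand-built event. The sketch below is an illustration only. It uses the Python standard library rather than an official Snowplow tracker. It builds a page view request for the collector's `/i` GET endpoint using Snowplow tracker protocol parameters (`e`, `url`, `p`, `tv`, `aid`, `eid`, `dtm`). The default app ID `mini-test`, the tracker version label `manual-0.1` and the helper names are hypothetical values chosen for this example.

```python
import time
import uuid
from urllib.parse import urlencode
from urllib.request import urlopen

COLLECTOR = "http://localhost:8080"  # local Vagrant collector


def page_view_url(page_url: str, app_id: str = "mini-test", collector: str = COLLECTOR) -> str:
    """Build a tracker-protocol GET request for a single page view event."""
    params = {
        "e": "pv",                            # event type: page view
        "url": page_url,                      # page being viewed
        "p": "web",                           # platform
        "tv": "manual-0.1",                   # tracker version (hypothetical label)
        "aid": app_id,                        # application ID
        "eid": str(uuid.uuid4()),             # unique event ID
        "dtm": str(int(time.time() * 1000)),  # device timestamp, milliseconds
    }
    return f"{collector}/i?{urlencode(params)}"


def send_page_view(page_url: str, collector: str = COLLECTOR) -> int:
    """Send the event and return the collector's HTTP status code."""
    with urlopen(page_view_url(page_url, collector=collector), timeout=5) as response:
        return response.status
```

With the VM running, `send_page_view("https://example.com/")` should return HTTP status 200. The event should then show up in the Snowplow Mini UI after it has passed through enrichment.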

To log in to the Snowplow Mini UI for the first time, follow the First time usage section within the documentation for the version of Snowplow Mini you have just created.

Once you are finished with Snowplow Mini locally, it is wise to stop the virtual machine:

$ vagrant halt

==> default: Attempting graceful shutdown of VM...

If you wish to tidy up all the resources, including deleting the virtual machine:

$ vagrant destroy

default: Are you sure you want to destroy the 'default' VM? [y/N] y

==> default: Destroying VM and associated drives...

Some advice on how to handle certain errors if you're trying to build this locally with Vagrant:

Your Vagrant version is probably outdated. Use Vagrant 2.0.0+.

This is caused by trying to use NFS. Comment out the relevant lines in the Vagrantfile.

Most likely this will happen on TASK [sp_mini_5_build_ui : Install npm packages based on package.json.] but see also: https://discourse.snowplowanalytics.com/t/snowplow-mini-local-vagrant/2930.

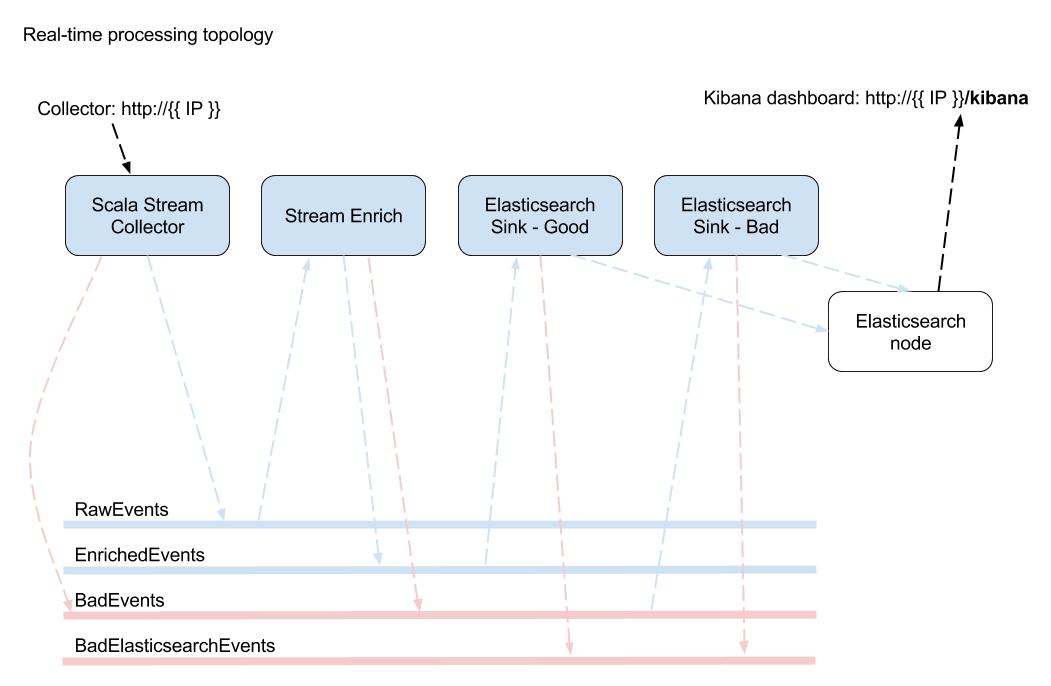

Snowplow Mini runs several distinct applications on the same box, all linked by NSQ topics. In a production deployment, each application could run in its own Auto Scaling group and each NSQ topic would be a distinct Kinesis stream.

- Stream Collector:

  - Starts a server listening on http://<sp mini public ip>/ which events can be sent to

  - Sends "good" events to the RawEventsNSQ topic

  - Sends "bad" events to the BadEventsNSQ topic

- Enrich:

  - Reads events in from the RawEventsNSQ topic

  - Sends events which passed the enrichment process to the EnrichedEventsNSQ topic

  - Sends events which failed the enrichment process to the BadEventsNSQ topic

- Elasticsearch Sink Good:

  - Reads events from the EnrichedEventsNSQ topic

  - Sends those events to the good Elasticsearch index

  - On failure to insert, writes errors to the BadElasticsearchEventsNSQ topic

- Elasticsearch Sink Bad:

  - Reads events from the BadEventsNSQ topic

  - Sends those events to the bad Elasticsearch index

  - On failure to insert, writes errors to the BadElasticsearchEventsNSQ topic
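The application list above amounts to a small routing table. The Python sketch below encodes that table and traces which topics and index an event passes through, depending on whether each stage succeeds. It is purely an illustration and does not talk to NSQ. The application keys (`collector`, `enrich`, `sink_good`, `sink_bad`), the `index:` labels and the `trace` helper are hypothetical names for this sketch. Only the topic names come from the list.

```python
# Illustrative model of the Snowplow Mini wiring.
# ROUTES maps (application, outcome) to the destination that application
# writes to; "index:good" / "index:bad" stand for the two Elasticsearch indexes.
ROUTES = {
    ("collector", "good"): "RawEventsNSQ",
    ("collector", "bad"): "BadEventsNSQ",
    ("enrich", "good"): "EnrichedEventsNSQ",
    ("enrich", "bad"): "BadEventsNSQ",
    ("sink_good", "good"): "index:good",
    ("sink_good", "bad"): "BadElasticsearchEventsNSQ",
    ("sink_bad", "good"): "index:bad",
    ("sink_bad", "bad"): "BadElasticsearchEventsNSQ",
}

# Which application reads from each NSQ topic. Destinations not listed here
# (the indexes, and BadElasticsearchEventsNSQ, whose consumer isn't described)
# end the trace.
CONSUMERS = {
    "RawEventsNSQ": "enrich",
    "EnrichedEventsNSQ": "sink_good",
    "BadEventsNSQ": "sink_bad",
}


def trace(outcomes: list[str]) -> list[str]:
    """Follow one event from the collector, given 'good' or 'bad' at each hop."""
    app = "collector"
    path = []
    for outcome in outcomes:
        destination = ROUTES[(app, outcome)]
        path.append(destination)
        next_app = CONSUMERS.get(destination)
        if next_app is None:
            break  # reached an index or a topic with no consumer in this model
        app = next_app
    return path


print(trace(["good", "good", "good"]))  # ['RawEventsNSQ', 'EnrichedEventsNSQ', 'index:good']
print(trace(["good", "bad", "good"]))   # ['RawEventsNSQ', 'BadEventsNSQ', 'index:bad']
```

In a production deployment, the same table would hold Kinesis stream names in place of the NSQ topics.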

These events can then be viewed in OpenSearch Dashboards (formerly Kibana) at http://<sp mini public ip>/kibana.
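Besides browsing in the dashboard, you can query the good and bad indexes directly with the standard OpenSearch query DSL. The sketch below builds a `_search` body that returns the most recent events for one application. It makes several assumptions to check against your instance's mappings. First, that the good index is named `good`. Second, that documents carry the enriched-event fields `app_id` and `collector_tstamp`. Third, that `app_id` is mapped as a keyword, so an exact `term` filter matches. The function names and the `mini-test` app ID are hypothetical.

```python
import json


def recent_events_query(app_id: str, window: str = "1h", size: int = 20) -> dict:
    """Query DSL body: newest events for one app_id within a time window.

    Assumes documents have `app_id` (keyword) and `collector_tstamp` (date).
    """
    return {
        "size": size,
        "sort": [{"collector_tstamp": {"order": "desc"}}],
        "query": {
            "bool": {
                "filter": [
                    {"term": {"app_id": app_id}},
                    {"range": {"collector_tstamp": {"gte": f"now-{window}"}}},
                ]
            }
        },
    }


def console_request(index: str, body: dict) -> str:
    """Render the query in the request format used by the Dev Tools console."""
    return f"GET {index}/_search\n{json.dumps(body, indent=2)}"


print(console_request("good", recent_events_query("mini-test")))
```

You can paste the printed text into the Dev Tools console in OpenSearch Dashboards. A `bool` query with a `filter` clause is used because exact matches and time ranges don't need relevance scoring.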

Snowplow Mini is copyright 2016-present Snowplow Analytics Ltd.

Licensed under the Snowplow Limited Use License Agreement. (If you are uncertain how it applies to your use case, check our answers to frequently asked questions.)

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.