-

✓ Configuration from kubeconfig files (

KUBECONFIGenvironment variable or$HOME/.kube) -

✓ Switch contexts interactively

-

✓ Authentication support (bearer token, basic auth, private key / cert, OAuth, OpenID Connect, Amazon EKS, Google Kubernetes Engine, Digital Ocean)

-

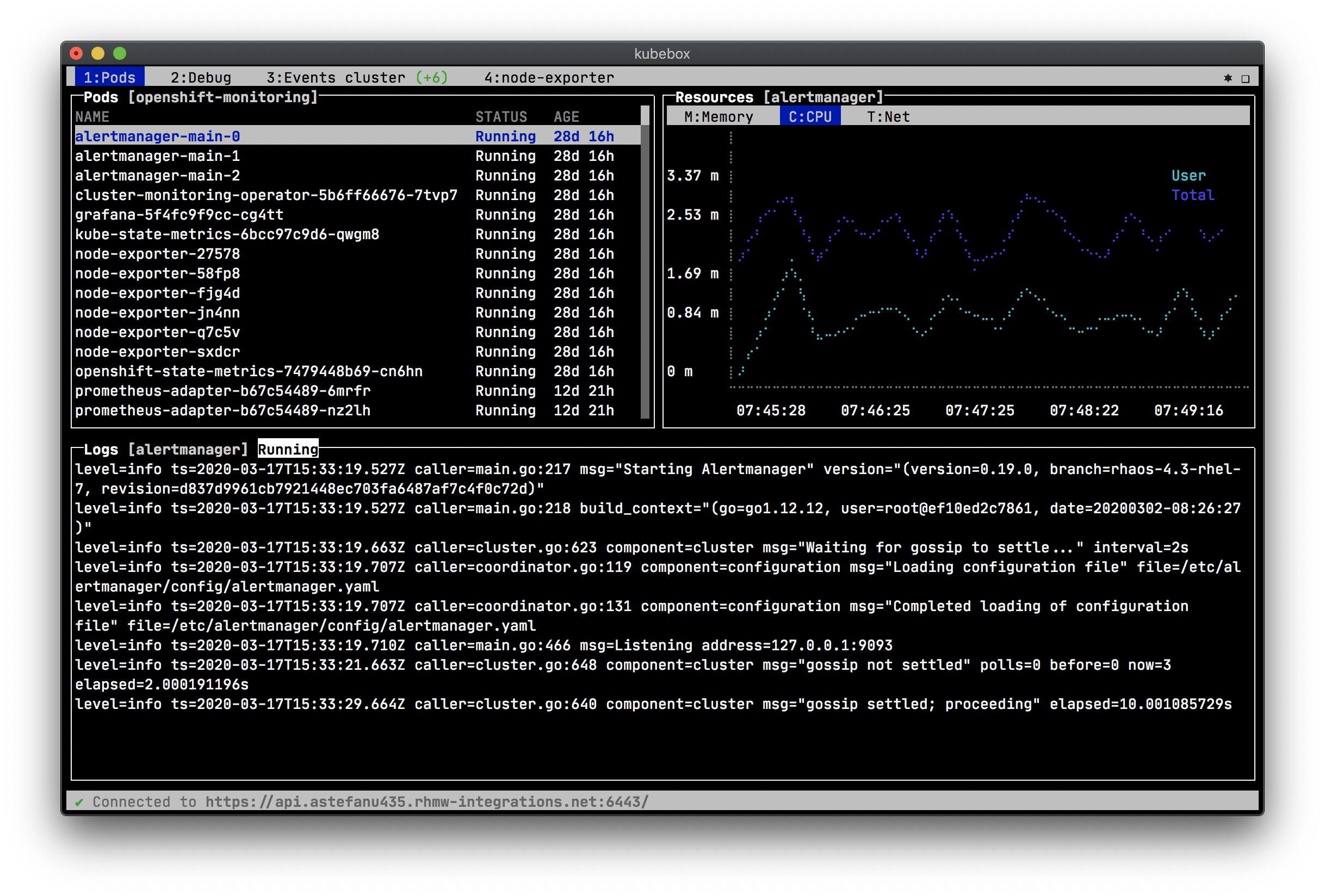

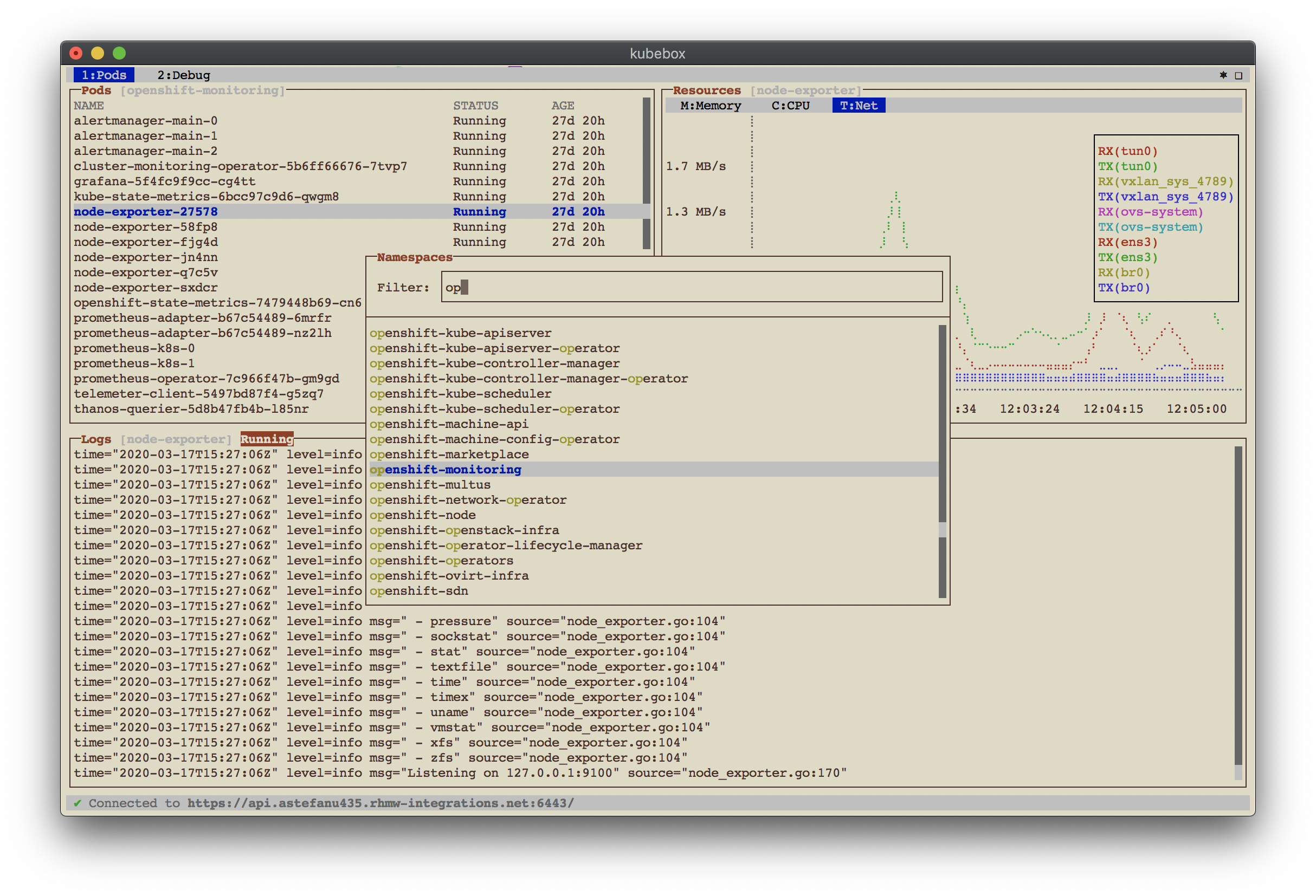

✓ Namespace selection and pods list watching

-

✓ Container log scrolling / watching

-

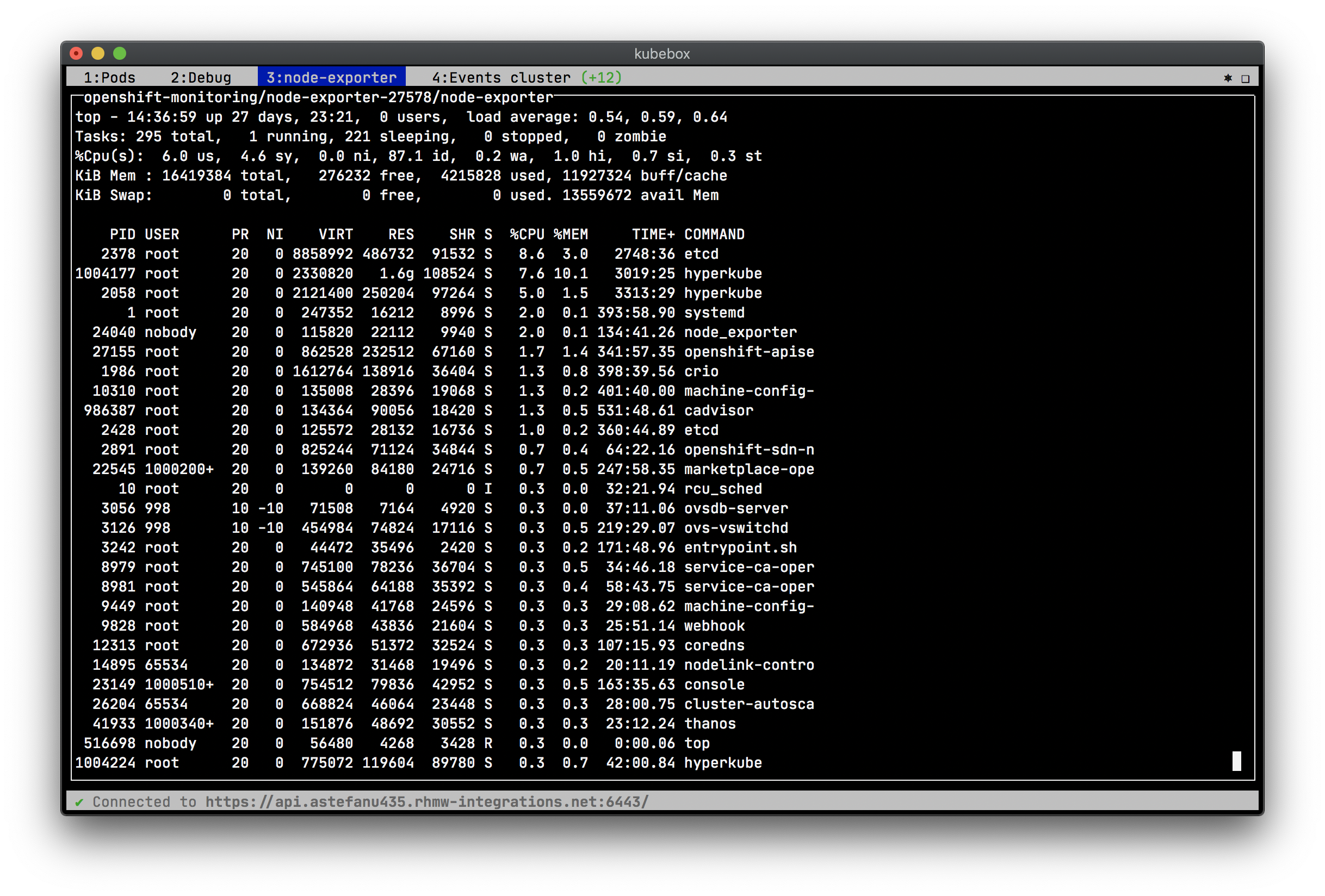

✓ Container resources usage (memory, CPU, network, file system charts) [1]

-

✓ Container remote exec terminal

-

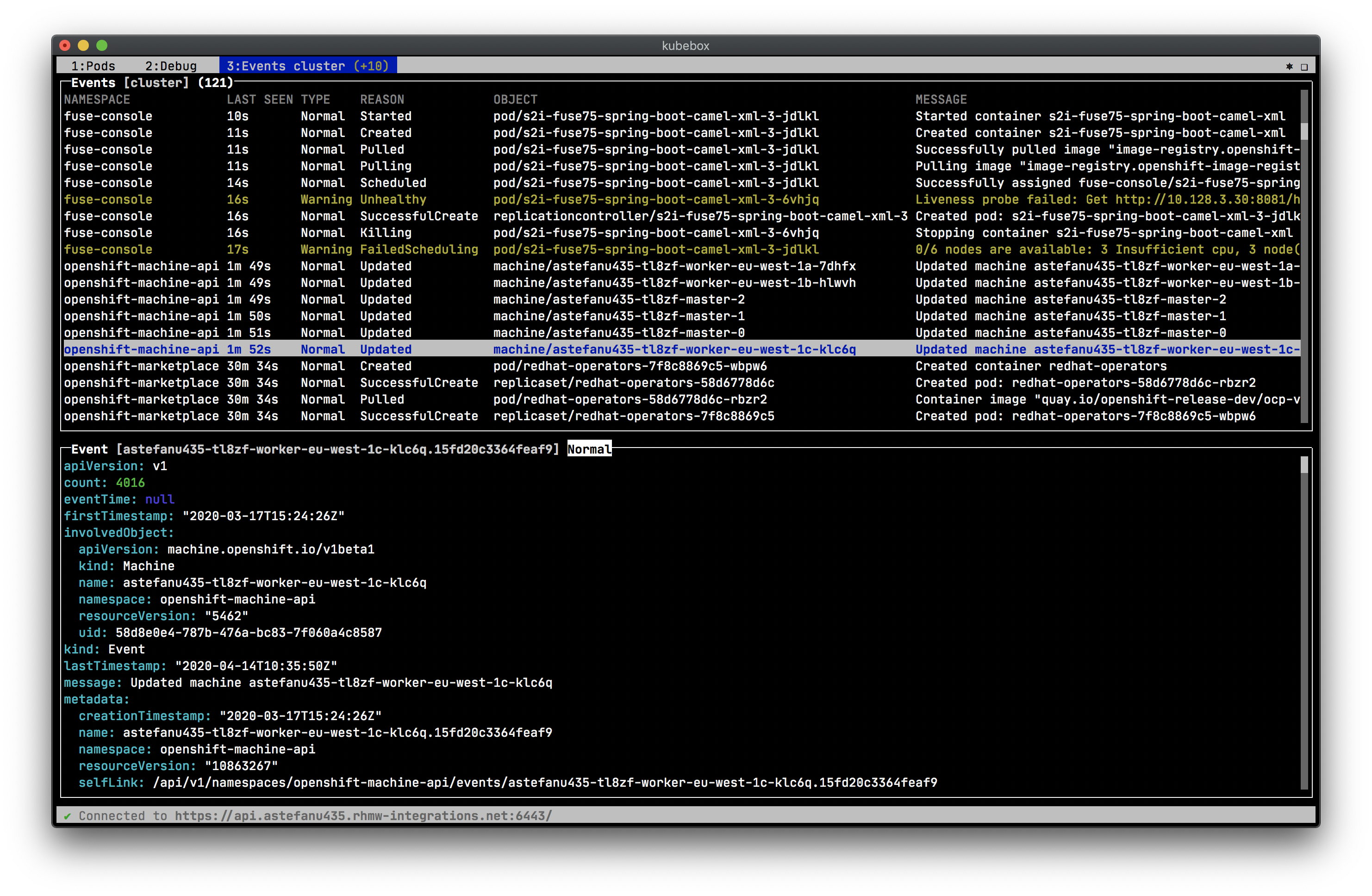

✓ Cluster, namespace, pod events

-

❏ Object configuration editor and CRUD operations

-

❏ Cluster and nodes views / monitoring

See the Screenshots section for some examples, and follow

The following alternatives are available for you to use Kubebox, depending on your preferences and constraints:

Download the Kubebox standalone executable for your OS:

# Linux (x86_64)

$ curl -Lo kubebox https://github.com/astefanutti/kubebox/releases/download/v0.10.0/kubebox-linux && chmod +x kubebox

# Linux (ARMv7)

$ curl -Lo kubebox https://github.com/astefanutti/kubebox/releases/download/v0.10.0/kubebox-linux-arm && chmod +x kubebox

# OSX

$ curl -Lo kubebox https://github.com/astefanutti/kubebox/releases/download/v0.10.0/kubebox-macos && chmod +x kubebox

# Windows

$ curl -Lo kubebox.exe https://github.com/astefanutti/kubebox/releases/download/v0.10.0/kubebox-windows.exe

Then run:

$ ./kubebox

Kubebox can be served from a service hosted in your Kubernetes cluster. Terminal emulation is provided by Xterm.js and the communication with the Kubernetes master API is proxied by the server.
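Returning to the standalone executable: the per-OS download commands listed earlier differ only in the release asset name, so they can be folded into one helper. The following is a minimal sketch and not part of Kubebox. The function names `kubebox_asset` and `kubebox_url` are hypothetical, and the mapping from `uname` output to asset names is an assumption based on the download URLs above.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with Kubebox): map `uname -s` / `uname -m`
# values to the v0.10.0 release asset names used in the download commands.
kubebox_asset() {
  os="$1"; arch="$2"
  case "$os" in
    Linux)
      case "$arch" in
        x86_64) echo "kubebox-linux" ;;
        armv7*) echo "kubebox-linux-arm" ;;
        *) echo "unsupported architecture: $arch" >&2; return 1 ;;
      esac ;;
    Darwin) echo "kubebox-macos" ;;
    MINGW*|MSYS*|CYGWIN*) echo "kubebox-windows.exe" ;;
    *) echo "unsupported OS: $os" >&2; return 1 ;;
  esac
}

# Build the download URL for the detected (or explicitly given) platform.
kubebox_url() {
  asset="$(kubebox_asset "${1:-$(uname -s)}" "${2:-$(uname -m)}")" || return 1
  echo "https://github.com/astefanutti/kubebox/releases/download/v0.10.0/$asset"
}
```

With these functions sourced, `curl -Lo kubebox "$(kubebox_url)" && chmod +x kubebox` would fetch the binary matching the current machine, assuming the platform is one of those listed.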

To deploy the server in your Kubernetes cluster, run:

$ kubectl apply -f https://raw.github.com/astefanutti/kubebox/master/kubernetes.yaml

To shut down the server and clean up resources, run:

$ kubectl delete namespace kubebox

For the Ingress resource to work, the cluster must have an Ingress controller running. See Ingress controllers for more information.

Alternatively, to deploy the server in your OpenShift cluster, run:

$ oc new-app -f https://raw.github.com/astefanutti/kubebox/master/openshift.yaml

You can run Kubebox as an in-cluster client with kubectl, e.g.:

$ kubectl run kubebox -it --rm --env="TERM=xterm" --image=astefanutti/kubebox --restart=Never

If RBAC is enabled, you’ll have to use the --serviceaccount option and reference a service account with sufficient permissions.
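To make the RBAC note concrete, here is a hedged sketch of creating a dedicated service account and starting Kubebox with it. The function name, the account name `kubebox`, the `default` namespace, and the choice of the built-in `view` cluster role are all assumptions for illustration. `view` is read-only and may not cover everything Kubebox does (remote exec, for instance), so adjust it to your needs. The `--serviceaccount` option is the one mentioned above, and it may not be available in recent kubectl releases.

```shell
#!/bin/sh
# Sketch only: the names and the `view` role are illustrative assumptions.
kubebox_run_with_sa() {
  ns="${1:-default}"   # namespace to run Kubebox in
  sa="${2:-kubebox}"   # service account name (hypothetical)

  # Create the service account and grant it cluster-wide read access.
  kubectl create serviceaccount "$sa" -n "$ns" || return 1
  kubectl create clusterrolebinding "$sa-view" \
    --clusterrole=view --serviceaccount="$ns:$sa" || return 1

  # Start Kubebox as an in-cluster client under that service account.
  kubectl run kubebox -n "$ns" -it --rm --env="TERM=xterm" \
    --image=astefanutti/kubebox --restart=Never --serviceaccount="$sa"
}
```

Calling `kubebox_run_with_sa` with no arguments uses the defaults. The service account and binding are not removed when the pod exits, so delete them afterwards if they were created only for a one-off session.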

You can run Kubebox using Docker, e.g.:

$ docker run -it --rm astefanutti/kubebox

You may want to mount your home directory so that Kubebox can rely on the ~/.kube/config file, e.g.:

$ docker run -it --rm -v ~/.kube/:/home/node/.kube/:ro astefanutti/kubebox

Kubebox is available online at https://astefanutti.github.com/kubebox. Note that this address must match the origins allowed for CORS by the API server. This can be achieved with the Kubernetes API server CLI, e.g.:

$ kube-apiserver --cors-allowed-origins '.*'

We try to support the various authentication strategies supported by kubectl, in order to provide seamless integration with your local setup. Here are the different authentication strategies we support, depending on how you’re using Kubebox:

| Authentication strategy | Executable | Docker | Online |
|---|---|---|---|
| OpenID Connect | ✔️ | ✔️ | ✔️[2] |
| Amazon EKS | ✔️ | | |
| Digital Ocean | ✔️ | | |
| Google Kubernetes Engine | ✔️ | | |

If the mode you’re using isn’t supported, you can refresh the authentication token/certs manually and update your kubeconfig file accordingly.
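As a sketch of that manual refresh, for a user entry that authenticates with a bearer token: once you have obtained a fresh token from your provider, `kubectl config set-credentials` can write it into the kubeconfig file that Kubebox reads. The function name and its arguments are hypothetical, and obtaining the token is provider-specific and not shown.

```shell
#!/bin/sh
# Hypothetical helper: store a freshly obtained bearer token in kubeconfig.
#   $1: name of the user entry in your kubeconfig
#   $2: the new token (how to obtain it depends on your provider)
kubebox_refresh_token() {
  user="$1"; token="$2"
  if [ -z "$user" ] || [ -z "$token" ]; then
    echo "usage: kubebox_refresh_token USER TOKEN" >&2
    return 2
  fi
  kubectl config set-credentials "$user" --token="$token"
}
```

After updating the entry, restart Kubebox, or log in again, so that it picks up the new credentials.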

Kubebox relies on cAdvisor to retrieve the resource usage metrics. Before version 0.8.0, Kubebox accessed the cAdvisor endpoints that are embedded in the Kubelet. However, these endpoints are being deprecated and will eventually be removed, as discussed in kubernetes#68522.

Starting with version 0.8.0, Kubebox expects cAdvisor to be deployed as a DaemonSet. This can be achieved with:

$ kubectl apply -f https://raw.githubusercontent.com/astefanutti/kubebox/master/cadvisor.yaml

It’s recommended to use the provided cadvisor.yaml file, which is tested to work with Kubebox.

However, the DaemonSet example, from the cAdvisor project, should also work just fine.

Note that the cAdvisor containers must run with a privileged security context, so that they can access the container runtime on each node.

You can change the --storage_duration and --housekeeping_interval options, added to the cAdvisor container arguments declared in the cadvisor.yaml file, to adjust the duration of the storage moving window (defaults to 5m0s) and the sampling period (defaults to 10s), respectively.

You may also have to provide the path of your cluster container runtime socket, in case it’s not following the usual convention.
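As a rough guide when tuning the two cAdvisor options above, the number of samples kept per container is about the storage window divided by the sampling period. The sketch below does that arithmetic in seconds. The function name is hypothetical, and the result is an approximation rather than a statement about cAdvisor's internal bookkeeping.

```shell
#!/bin/sh
# Approximate number of samples held in the moving window.
#   $1: --storage_duration, in seconds
#   $2: --housekeeping_interval, in seconds
samples_in_window() {
  if [ "$2" -le 0 ]; then
    echo "interval must be positive" >&2
    return 1
  fi
  echo $(( $1 / $2 ))
}

samples_in_window 300 10   # defaults: 5m0s window, 10s period -> prints 30
```

A longer window or a shorter period increases the number of samples, and with it the memory cAdvisor uses for each container.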

| Keybinding | Description |
|---|---|
| General | |
| l, Ctrl+l | Login |
| n | Change current namespace |
| [Shift+]←, → | Navigate screens |
| Tab, Shift+Tab | Change focus within the active screen |
| ↑, ↓ | Navigate list / form / log |
| PgUp, PgDn | Move one page up / down |
| Enter | Select item / submit form |
| Esc | Close modal window / cancel form |
| Ctrl+z | Close current screen |
| q, Ctrl+q | Exit [3] |
| Login | |
| ←, → | Navigate Kube configurations |
| Pods | |
| Enter | Select pod / cycle containers |
| r | Remote shell into container |
| m | Memory usage |
| c | CPU usage |
| t | Network usage |
| f | File system usage |
| e | Pod events |
| Shift+e | Namespace events |
| Ctrl+e | Cluster events |

- Resources usage metrics are unavailable!
  - Starting with version 0.8.0, Kubebox expects cAdvisor to be deployed as a DaemonSet. See the cAdvisor section for more details.
  - The metrics are retrieved from the REST API of the cAdvisor pod running on the same node as the container for which the metrics are requested. That REST API is accessed via the API server proxy, which requires proper RBAC permissions, e.g.:

        # Permission to list the cAdvisor pods (selected using the `spec.nodeName` field selector)
        $ kubectl auth can-i list pods -n cadvisor
        yes
        # Permission to proxy the selected cAdvisor pod, to call its REST API
        $ kubectl auth can-i get pod --subresource proxy -n cadvisor
        yes
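The two permission checks above can be wrapped in a small function when diagnosing missing metrics. This is a sketch: the function name is hypothetical, and the `cadvisor` namespace is taken from the commands above and may differ in your cluster. It relies on `kubectl auth can-i` exiting with a non-zero status when the action is not allowed.

```shell
#!/bin/sh
# Report whether the current user has the permissions needed to read metrics
# from the cAdvisor pods. Returns non-zero if any check fails.
check_cadvisor_access() {
  ns="${1:-cadvisor}"
  status=0

  # Permission to list the cAdvisor pods.
  if kubectl auth can-i list pods -n "$ns" >/dev/null; then
    echo "list pods: ok"
  else
    echo "list pods: denied"; status=1
  fi

  # Permission to proxy a cAdvisor pod, to call its REST API.
  if kubectl auth can-i get pod --subresource proxy -n "$ns" >/dev/null; then
    echo "proxy pod: ok"
  else
    echo "proxy pod: denied"; status=1
  fi

  return "$status"
}
```

Run `check_cadvisor_access` with the same kubeconfig context that Kubebox uses, since the result depends on the identity making the request.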

$ git clone https://github.com/astefanutti/kubebox.git

$ cd kubebox

$ npm install

$ node index.js