Final plan

- Ashwani
  - Attention visualisation
  - Splitting the datasets in ONMT training
- Ben
  - Write scripts to extract features from the data, e.g. sentence length, magnitude of numbers
- Filippo
  - Running the model and gathering statistics on loss and BLEU, decomposed by difficulty and by feature
    - Loss is cross-entropy; it needs to be added to the `metrics.py` calculations

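For the `metrics.py` item: the cross-entropy of an answer is the mean negative log-probability that the model assigns to each gold target token, and perplexity is its exponential. This is a minimal sketch that assumes the per-token natural-log probabilities are already available. The function names are hypothetical and do not come from `metrics.py` or OpenNMT.

```python
import math
from typing import Sequence


def cross_entropy(target_log_probs: Sequence[float]) -> float:
    """Mean per-token cross-entropy (in nats) of the reference answer.

    target_log_probs[t] is log p(y_t | y_<t, x): the natural-log probability
    the model assigned to the gold token at position t.
    """
    if not target_log_probs:
        raise ValueError("need at least one target token")
    return -sum(target_log_probs) / len(target_log_probs)


def perplexity(target_log_probs: Sequence[float]) -> float:
    """Perplexity is exp(cross-entropy)."""
    return math.exp(cross_entropy(target_log_probs))


# Example: a 3-token answer where every gold token was given probability 0.5.
log_probs = [math.log(0.5)] * 3
print(round(cross_entropy(log_probs), 4))  # 0.6931
print(round(perplexity(log_probs), 4))     # 2.0
```

To decompose the loss by difficulty or feature, these values would be averaged separately within each group of examples.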

Reference paper: Out-of-Distribution Generalization via Risk Extrapolation, anonymous authors, 2020

Our aim is to generalise to test data that is clearly different from, but a natural extrapolation of, the training data. There are particular features that need to be extrapolated, e.g. the magnitude of numbers or the length of formulas.

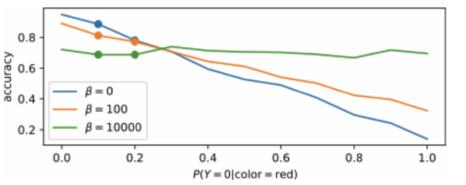

Now consider the plot below. It comes from a specific example, but in general, take the x axis to be the relevant feature we want to generalise over (such as number magnitude) and the y axis to be performance.

The dots represent performance on different training environments. In our case, the environments could be "addition problems with smaller numbers" and "addition problems with larger numbers". To clarify: these do not have to correspond to the different maths modules of our dataset; I think splits within each module make more sense here.

Now, suppose that performance on both training environments is fairly good, but worse on the larger-magnitude environment (the blue data). What tends to happen is that on an extreme extrapolation (the right end of the plot), performance is much worse. I hypothesise that this is essentially what is happening on our extrapolation set.

We can address this by reducing the variance of performance between training environments, which corresponds to bringing the two points closer together in the plot (green and orange data). This leads to better performance on the extreme extrapolation because it removes the dependence of performance on the feature (the x axis), flattening the curve.

This way, we can get better test performance (green and orange, right-hand side) even though average training performance may worsen (green and orange, left-hand side). This is acceptable: reducing overfitting typically makes training performance worse but test performance better. There is a trade-off, however, controlled by the hyperparameter beta.
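As a rough numerical illustration of the argument above, suppose (purely for illustration) that performance changes linearly with the feature. Extrapolating the line through the two training environments then shows how the gap between them drives the drop at an extreme feature value. The function name and all numbers are made up.

```python
def extrapolate(x1: float, perf1: float, x2: float, perf2: float, x: float) -> float:
    """Performance at feature value x on the line through two training environments.

    (x1, perf1) and (x2, perf2) are (feature value, performance) pairs, e.g. a
    typical number magnitude and the accuracy on that environment. Assumes a
    linear trend, which is a simplification for illustration only.
    """
    slope = (perf2 - perf1) / (x2 - x1)
    return perf1 + slope * (x - x1)


# Made-up numbers: training environments at feature values 1 and 2, test at 10.
wide_gap = extrapolate(1, 0.95, 2, 0.85, 10)    # about 0.05: steep drop
narrow_gap = extrapolate(1, 0.92, 2, 0.90, 10)  # about 0.74: flatter curve
print(wide_gap, narrow_gap)
```

Narrowing the gap lowers training performance on the first environment (0.95 to 0.92) but raises the extrapolated value, which is the trade-off that beta controls.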

Analyse the statistics of the dataset

- This is similar to something we already discussed in the interim report, which would be good to do regardless:

  > From a very preliminary analysis on this task [arithmetic__add_or_sub_big], there appears to be some correlation between the complexity of the question (i.e. the number of digits that need to be added and the amount of text in the question) and the correctness of the result. In future we could test this systematically, measuring the variables over the whole dataset using a post-processing program.

- For each module, there is a corresponding extrapolation set that increases some feature. The features can be summarised as "big", "longer", or "more". We need to measure these features precisely and split the training datasets by them. Two or three partitions would probably be enough.

- It's possible that the "easy", "medium" and "hard" difficulty splits are already suitable as the different environments. We should verify this by measuring the relevant features for each difficulty. If the correlation between difficulty and the feature is too low, we should instead split by the measured features, as in the previous point.
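These measurements could start from something like the sketch below. It assumes each question is a plain string. The function names (`extract_features`, `partition`, `pearson`), the particular features, and the example questions are illustrative choices, not an existing script.

```python
import re
from typing import Dict, List, Sequence

# Integers or decimals, with an optional minus sign attached directly to the digits.
NUMBER_RE = re.compile(r"-?\d+(?:\.\d+)?")


def extract_features(question: str) -> Dict[str, int]:
    """Measure features that the extrapolation sets scale up ("big", "longer", "more")."""
    numbers = NUMBER_RE.findall(question)
    digit_counts = [sum(ch.isdigit() for ch in n) for n in numbers]
    return {
        "num_chars": len(question),                  # "longer"
        "num_words": len(question.split()),          # "longer"
        "num_numbers": len(numbers),                 # "more"
        "max_digits": max(digit_counts, default=0),  # "big"
    }


def partition(values: Sequence[float], n_parts: int = 3) -> List[int]:
    """Give each example an environment label 0..n_parts-1 by rank, in near-equal bins.

    Ties are broken by original position, so equal values can land in different bins.
    """
    order = sorted(range(len(values)), key=lambda i: values[i])
    labels = [0] * len(values)
    for rank, i in enumerate(order):
        labels[i] = rank * n_parts // len(values)
    return labels


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation, e.g. between a feature and difficulty coded easy=0, medium=1, hard=2.

    Raises ZeroDivisionError if either input is constant.
    """
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    norm_x = sum((x - mean_x) ** 2 for x in xs) ** 0.5
    norm_y = sum((y - mean_y) ** 2 for y in ys) ** 0.5
    return cov / (norm_x * norm_y)


# Made-up questions, for illustration only.
questions = ["What is 5 + 3?", "Calculate -412 + 90.", "Total of 0.3 and 123456."]
digits = [extract_features(q)["max_digits"] for q in questions]
print(digits)             # [1, 3, 6]
print(partition(digits))  # [0, 1, 2]
```

Rank-based bins keep the environments roughly equal in size. Fixed thresholds (e.g. "at most 5 digits") would be easier to interpret but could produce very unbalanced splits.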

Check the variance in performance between the split datasets

- Before using the method, we first need to verify that performance actually differs significantly between the splits (e.g. smaller numbers vs larger numbers). If there is little difference, there will be little variance to minimise in the first place.
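One way to check whether the gap is significant is a two-proportion z-test. This assumes performance is measured as exact-match accuracy on each split and that examples are independent. The helper names and the counts are hypothetical.

```python
import math


def two_proportion_z(correct_a: int, total_a: int, correct_b: int, total_b: int) -> float:
    """z statistic for the accuracy difference between split A and split B (pooled variance).

    Undefined (ZeroDivisionError) if both splits are all correct or all wrong.
    """
    acc_a, acc_b = correct_a / total_a, correct_b / total_b
    pooled = (correct_a + correct_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    return (acc_a - acc_b) / std_err


def two_sided_p_value(z: float) -> float:
    """Two-sided p-value of z under the standard normal: 2 * (1 - Phi(|z|))."""
    return math.erfc(abs(z) / math.sqrt(2))


# Made-up counts: 900/1000 correct on the small-number split, 850/1000 on the large-number split.
z = two_proportion_z(900, 1000, 850, 1000)
print(f"z = {z:.2f}, p = {two_sided_p_value(z):.4f}")  # z is about 3.4, p well below 0.01
```

A small p-value suggests that the splits really differ. If it is large, the environments are too similar for the variance penalty to have much effect.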

Train on the regularised loss

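- From the paper, the objective is

  $$\mathcal{R}_{\text{V-REx}}(\theta) = \sum_{e=1}^{m} \mathcal{R}_e(\theta) + \beta \operatorname{Var}\left(\{\mathcal{R}_1(\theta), \ldots, \mathcal{R}_m(\theta)\}\right)$$

  where $\mathcal{R}_e(\theta)$ is the expected loss on environment $e$, out of $m$ training environments. Expected loss is approximated by the sample average of the loss over the training points in that environment. That sounds fancy, but it's just the usual meaning of "loss" in deep learning. We are already optimising the first term; we just need to measure the variance between the per-environment losses and set the hyperparameter $\beta$.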

- According to the authors, the regularisation term should only be applied partway through training, so we need to choose when. This is discussed in section 4.2.1 of the paper. They suggest:

  > Stability penalties should be applied around when traditional overfitting begins, to ensure that the model has learned predictive features, and that penalties still give meaningful training signals.

  This still isn't too hard: we would need to measure when the model starts to overfit (perhaps this already happens for the baseline). Hopefully their heuristic works for us, but there is a risk that it does not. In that case we could tune the starting point ourselves, but this would be costly.

- The method requires modifications to OpenNMT: splitting training into the different datasets and then combining their losses into the regularised loss. I have not investigated this yet. Given the simplicity of the method, I am assuming it will be feasible, but we should check before relying on it.
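Below is a framework-independent sketch of the pieces above. It assumes the training loop can compute per-example losses separately for each environment. The sketch estimates each environment's expected loss as a batch mean and combines the estimates into the regularised objective (the sum of the per-environment losses plus beta times their variance). It then switches the penalty on using a simple overfitting heuristic: validation loss rising for a few consecutive checks. All function names, the `patience` heuristic, and the `warmup_beta` default of 0 are assumptions. None of them come from the paper or from OpenNMT.

```python
from statistics import mean, pvariance
from typing import Callable, Dict, List, Sequence, TypeVar

Example = TypeVar("Example")


def env_risks(batches: Dict[str, List[Example]],
              example_loss: Callable[[Example], float]) -> List[float]:
    """Per-environment risk estimate: the mean loss over that environment's batch."""
    return [mean(example_loss(x) for x in batch) for batch in batches.values()]


def vrex_objective(risks: Sequence[float], beta: float) -> float:
    """Sum of the per-environment risks plus beta times their (population) variance."""
    return sum(risks) + beta * pvariance(risks)


def overfitting_onset(val_losses: Sequence[float], patience: int = 2) -> int:
    """Index of the last validation check before the loss rose `patience` times in a row.

    Returns -1 if validation loss never rises for `patience` consecutive checks.
    """
    rises = 0
    for i in range(1, len(val_losses)):
        rises = rises + 1 if val_losses[i] > val_losses[i - 1] else 0
        if rises == patience:
            return i - patience
    return -1


def penalty_weight(step: int, start_step: int, beta: float, warmup_beta: float = 0.0) -> float:
    """Use warmup_beta before start_step and the full beta from start_step onwards."""
    return beta if step >= start_step else warmup_beta


# Made-up numbers: each "example" is simply its own loss value.
batches = {"small_numbers": [0.1, 0.3], "large_numbers": [0.5, 0.7]}
risks = env_risks(batches, example_loss=lambda loss: loss)  # about [0.2, 0.6]
print(vrex_objective(risks, beta=10.0))                     # about 0.8 + 10 * 0.04 = 1.2
print(overfitting_onset([3.0, 2.0, 1.5, 1.6, 1.7, 1.8]))    # 2
```

In a PyTorch training loop such as OpenNMT-py's, the risks would be tensors. The variance would then need a differentiable operation, for example `torch.stack(risks).var(unbiased=False)`, so that gradients flow through the penalty. The plain-float version here only illustrates the arithmetic. The onset index would be converted to a training step by multiplying it by the validation interval.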

Tokenisation/preprocessing

- English whole words
  - Very straightforward, worth a try
- Polish notation
  - Somewhat challenging to write a conversion script
    - The conversion is context-sensitive
    - Not all problems are applicable
    - May need to handle different cases even for the problems that are applicable
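The core of a Polish notation conversion could look like the following minimal sketch. It assumes the arithmetic expression has already been extracted from the question text, which is the harder, context-sensitive part noted above. It handles integers and decimals, `+ - * /`, parentheses, and unary minus. Unary minus is written as the hypothetical token `neg` so that the prefix form stays unambiguous. The function names are illustrative.

```python
import re
from typing import List, Optional

# A number (integer or decimal) or an operator/parenthesis, with optional leading spaces.
TOKEN_RE = re.compile(r"\s*(\d+(?:\.\d+)?|[-+*/()])")
NUMBER_RE = re.compile(r"\d+(?:\.\d+)?")


def tokenize(expr: str) -> List[str]:
    expr = expr.strip()
    tokens: List[str] = []
    pos = 0
    while pos < len(expr):
        match = TOKEN_RE.match(expr, pos)
        if match is None:
            raise ValueError(f"unexpected character at position {pos} in {expr!r}")
        tokens.append(match.group(1))
        pos = match.end()
    return tokens


def to_prefix(expr: str) -> List[str]:
    """Convert an infix arithmetic expression to Polish (prefix) notation tokens."""
    tokens = tokenize(expr)
    pos = 0

    def peek() -> Optional[str]:
        return tokens[pos] if pos < len(tokens) else None

    def take() -> str:
        nonlocal pos
        if pos >= len(tokens):
            raise ValueError("unexpected end of expression")
        pos += 1
        return tokens[pos - 1]

    def parse_sum() -> List[str]:      # sum := product (('+' | '-') product)*
        left = parse_product()
        while peek() in ("+", "-"):
            op = take()
            left = [op] + left + parse_product()  # left-associative: a - b - c -> - - a b c
        return left

    def parse_product() -> List[str]:  # product := factor (('*' | '/') factor)*
        left = parse_factor()
        while peek() in ("*", "/"):
            op = take()
            left = [op] + left + parse_factor()
        return left

    def parse_factor() -> List[str]:   # factor := number | '(' sum ')' | '-' factor
        tok = take()
        if tok == "(":
            inner = parse_sum()
            if take() != ")":
                raise ValueError("expected ')'")
            return inner
        if tok == "-":
            return ["neg"] + parse_factor()  # unary minus gets its own token
        if NUMBER_RE.fullmatch(tok):
            return [tok]
        raise ValueError(f"unexpected token {tok!r}")

    result = parse_sum()
    if peek() is not None:
        raise ValueError(f"unexpected token {peek()!r}")
    return result


print(" ".join(to_prefix("(1 + 2) * 3")))   # * + 1 2 3
print(" ".join(to_prefix("-4.5 - 2 - 1")))  # - - neg 4.5 2 1
```

Prefix notation removes the need for parentheses, which is presumably the attraction for the model. Unary minus needs a separate token because `-` would otherwise be ambiguous between its unary and binary meanings.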

Attention visualisation

- Try using existing visualisation code on our model

- Check many examples carefully to see if we can interpret the attention mechanism in a meaningful way

- If there is a meaningful interpretation: great, we can highlight it in the report, and it may give insight into potential improvements.

- If there is no meaningful interpretation: we still report the results, but discuss them only briefly.

- Ashwani seems well-positioned to work on this
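A quick text-based way to scan many examples before plotting heatmaps is to list, for each output token, the source token it attends to most. This sketch assumes the attention weights are available as a target-by-source matrix, for example from OpenNMT-py's `-attn_debug` translation option. The function name and the weights in the example are made up.

```python
from typing import List, Sequence, Tuple


def strongest_alignments(src: Sequence[str], tgt: Sequence[str],
                         attn: Sequence[Sequence[float]]) -> List[Tuple[str, str, float]]:
    """For each target token, return (target token, most-attended source token, weight).

    attn[t][s] is the attention weight from target position t to source position s.
    Ties go to the earliest source position.
    """
    if len(attn) != len(tgt) or any(len(row) != len(src) for row in attn):
        raise ValueError("attention matrix must be len(tgt) x len(src)")
    result = []
    for t, row in enumerate(attn):
        s = max(range(len(src)), key=row.__getitem__)
        result.append((tgt[t], src[s], row[s]))
    return result


# Character-level example with made-up weights: "12+3" -> "15".
src, tgt = list("12+3"), list("15")
attn = [
    [0.1, 0.2, 0.1, 0.6],  # weights while producing "1"
    [0.7, 0.1, 0.1, 0.1],  # weights while producing "5"
]
for out_tok, src_tok, weight in strongest_alignments(src, tgt, attn):
    print(f"{out_tok!r} <- {src_tok!r} ({weight:.2f})")
```

Only the single strongest source token is shown, which hides diffuse attention. Examples that look interesting here are worth checking against a full heatmap.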