Your Pharmacist Assistant

Pharmacists often have a hard time locating a given medicine on the shelves. Our speech-to-location platform helps them find the right location quickly and easily, with as user-friendly an experience as possible.

We first built a front end for users to interact with. To keep the interface as user-friendly as possible, we gave it only two controls: a speech dictation control and a manual text input field.

The client application displays the medicine it found and its location.

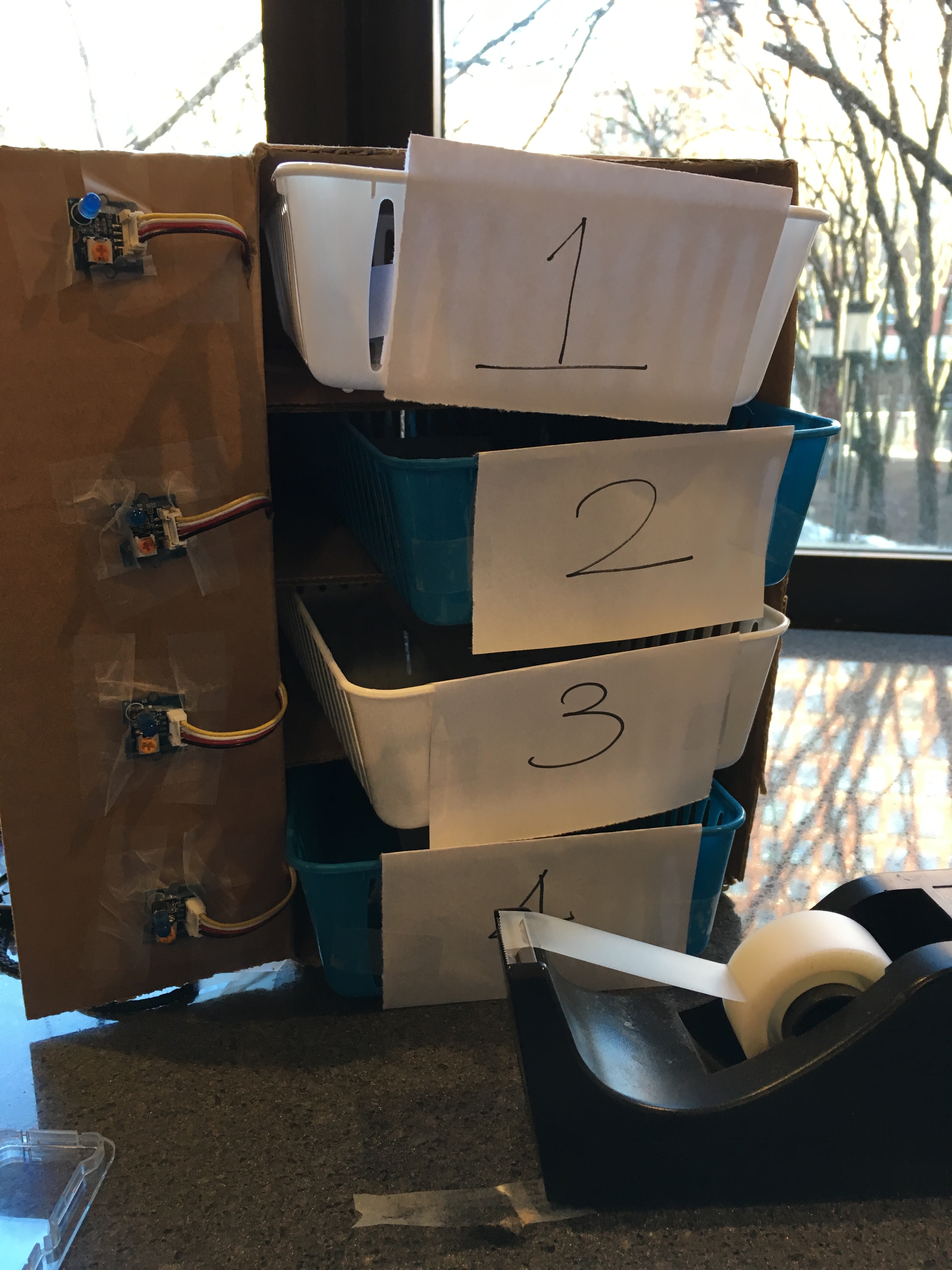

But for pharmacists to actually find the medicine easily, we need to light up the physical location, which is where our hardware comes in.

We built a quick proof-of-concept prototype of a typical medicine cabinet (with trays) and added a JSON database to manage the medicine locations. When a voice command matches a medicine, its location automatically lights up so the pharmacist can easily see where the medicine is.
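The matching step described above could look roughly like the following sketch. The record shape (`name`, `tray`), the sample entries, and the `findMedicine` helper are all hypothetical, chosen only for illustration; the project's actual JSON schema and matching logic may differ.

```javascript
// Hypothetical database contents; the real project keeps these in a JSON file.
const medicines = [
  { name: "ibuprofen", tray: 0 },
  { name: "amoxicillin", tray: 1 },
  { name: "metformin", tray: 2 },
];

// Return the first entry whose name appears in the transcript, or null.
function findMedicine(transcript, db) {
  const spoken = transcript.toLowerCase();
  return db.find((entry) => spoken.includes(entry.name.toLowerCase())) || null;
}

console.log(findMedicine("Where is the Amoxicillin?", medicines));
// → { name: 'amoxicillin', tray: 1 }
```

A plain substring match keeps the sketch short; a speech transcript with misheard names would likely need fuzzier matching.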

If that's not enough, we even added "Hey Google" command triggers so you can begin telling the assistant what to do.

The following frameworks and technologies were used for this project:

- Arduino 101 as a simple location indicator, along with some LEDs
- Node.js + Express server to communicate with the Arduino through the COM port
- ReactJS on the front end to give the user a nice interface
- Google Cloud Platform's Speech API on npm to create the speech-to-text component for the project
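As a rough illustration of how the Node.js server might pass a tray location to the Arduino, here is a minimal sketch. The `"<tray>\n"` message format and the `trayMessage` and `lightTray` helpers are assumptions, not the project's actual protocol. The `port` argument is any object with a `write()` method; in a real setup it would presumably be a serial port object opened on the COM port.

```javascript
// Build the message for one tray. The "<tray>\n" format is an assumption.
function trayMessage(tray) {
  if (!Number.isInteger(tray) || tray < 0) {
    throw new RangeError(`invalid tray index: ${tray}`);
  }
  return `${tray}\n`;
}

// Send the message through any object exposing write(), e.g. a serial port.
function lightTray(port, tray) {
  port.write(trayMessage(tray));
}

// Stand-in port that records writes, so the sketch runs without hardware.
const sent = [];
const fakePort = { write: (data) => sent.push(data) };
lightTray(fakePort, 2);
console.log(JSON.stringify(sent)); // → ["2\n"]
```

Passing the port in as a parameter keeps the message logic testable without an Arduino attached.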

This project is a submission for the HackNYU 2018 healthcare/assistive technology track.

- Load `arduino_code` onto your Arduino and connect the necessary components to the appropriate ports. Visit Arduino's website to figure out how.

- Make sure you have Node.js and npm installed.

- Run `npm install`, then run `node index.js` from the `api-server` directory.

- Run `npm install` and then `npm start` from the `app-client` directory to run the React front end application.