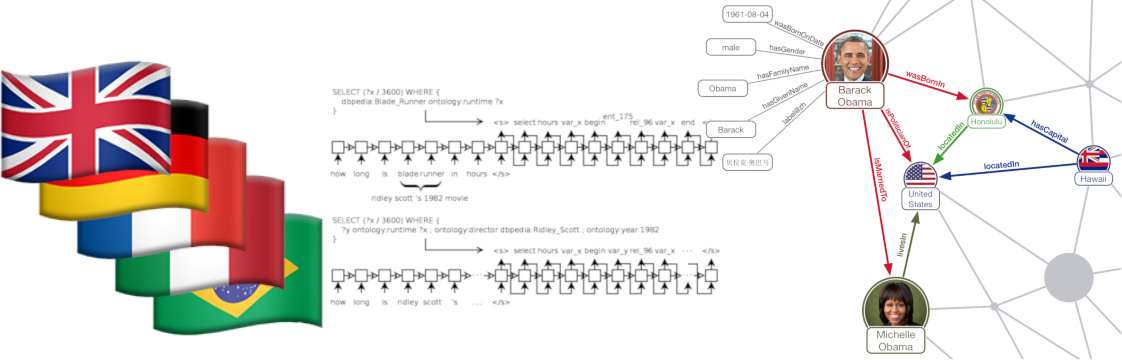

A Machine-Translation Approach for Question Answering over Knowledge Graphs.

If you are looking for the code for the papers "SPARQL as a Foreign Language" and "Neural Machine Translation for Query Construction and Composition", please check out tag `v0.1.0-akaha` or branch `v1`.

Coming soon!

Clone the repository.

```bash
pip install -r requirements.txt
```

You can download the pre-generated data and model checkpoints from here (1.1 GB) and extract them into folders with the respective names.

The templates used in the paper can be found in files such as `Annotations_F30_art.csv`. `data/art_30` will be the ID of the working dataset used throughout this tutorial. To generate the training data, launch the following commands.

```bash
mkdir -p data/art_30
python nspm/generator.py --templates data/templates/Annotations_F30_art.csv --output data/art_30
```

Launch the following command if you want to build the dataset separately; otherwise, it will be called internally during training.

```bash
python nspm/data_gen.py --input data/art_30 --output data/art_30
```

Now go back to the initial directory and launch `learner.py` to train the model.

```bash
python nspm/learner.py --input data/art_30 --output data/art_30
```

This command will create model checkpoints in `data/art_30` and some pickle files in `data/art_30/pickle_objects`.
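The generation and training steps above rest on two ideas: templates are filled with concrete entities to produce (question, query) pairs, and each SPARQL query is rewritten as a plain token sequence that a neural machine translation model can learn to emit, then mapped back to SPARQL after prediction. The sketch below illustrates both under stated assumptions. The template, the entities, the replacement table and the names `TEMPLATE`, `ENTITIES`, `ENCODING`, `instantiate`, `encode` and `decode` are all hypothetical and not NSpM's actual code or file format.

```python
# Hypothetical template: a question and a SPARQL query sharing the placeholder <A>.
# The real template CSV format used by NSpM may differ; this pair is illustrative only.
TEMPLATE = (
    "where was <A> born?",
    "SELECT ?x WHERE { <A> dbo:birthPlace ?x }",
)

# Hypothetical (label, resource) pairs that would normally be retrieved
# from a knowledge graph such as DBpedia.
ENTITIES = [
    ("Leonardo da Vinci", "dbr:Leonardo_da_Vinci"),
    ("Ada Lovelace", "dbr:Ada_Lovelace"),
]

# Hypothetical replacement table, applied in order when encoding and in
# reverse order when decoding. NSpM's own table may use different tokens.
ENCODING = [
    ("{", " brack_open "),
    ("}", " brack_close "),
    ("?", "var_"),
    ("dbr:", "dbr_"),
    ("dbo:", "dbo_"),
]


def instantiate(template, label, resource):
    """Fill the <A> placeholder in both the question and the query."""
    question, query = template
    return question.replace("<A>", label), query.replace("<A>", resource)


def encode(query):
    """Turn a SPARQL query into a single-space-separated token sequence."""
    for symbol, token in ENCODING:
        query = query.replace(symbol, token)
    return " ".join(query.split())  # collapse the extra spaces added above


def decode(sequence):
    """Map a token sequence back to SPARQL.

    Naive inverse of encode: it would corrupt entity names that happen to
    contain a token such as "var_", which a real implementation must avoid.
    """
    for symbol, token in reversed(ENCODING):
        sequence = sequence.replace(token.strip(), symbol)
    return sequence


if __name__ == "__main__":
    for label, resource in ENTITIES:
        question, query = instantiate(TEMPLATE, label, resource)
        target = encode(query)
        print(question, "=>", target)
        assert decode(target) == query  # the round trip holds for these inputs
```

Running it prints one source/target pair per entity, for example `where was Leonardo da Vinci born? => SELECT var_x WHERE brack_open dbr_Leonardo_da_Vinci dbo_birthPlace var_x brack_close`. Replacing SPARQL symbols with word-like tokens lets the query side be tokenized like any natural language, which is the intuition behind treating SPARQL as a foreign language.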

To predict the SPARQL query for a given question, run the interpreter; it will store the detailed output in `output_query`.

```bash
python nspm/interpreter.py --input data/art_30 --output data/art_30 --query "yuncken freeman has architected in how many cities?"
```

Alternatively, if you want to use NSpM with airML to install pre-trained models, follow these steps:

- Install the latest version of airML from here.

- Navigate to the `table.kns` file here and check whether your model is listed in it.

- Then copy the name of that model and use it with `interpreter.py` as follows:

```bash
python interpreter.py --airml http://nspm.org/art --output data/art_30 --inputstr "yuncken freeman has architected in how many cities?"
```

- Components of the Adam Medical platform, partly developed by Jose A. Alvarado at Graphen (including a humanoid robot called Dr Adam), rely on NSpM technology.

- The Telegram NSpM chatbot offers an integration of NSpM with the Telegram messaging platform.

- The Google Summer of Code program has supported 6 students working on the NSpM-backed project "A neural question answering model for DBpedia" since 2018.

- A question answering system was implemented on top of NSpM by Muhammad Qasim.

```bibtex
@inproceedings{soru-marx-2017,
  author = "Tommaso Soru and Edgard Marx and Diego Moussallem and Gustavo Publio and Andr\'e Valdestilhas and Diego Esteves and Ciro Baron Neto",
  title = "{SPARQL} as a Foreign Language",
  year = "2017",
  booktitle = "13th International Conference on Semantic Systems (SEMANTiCS 2017) - Posters and Demos",
  url = "https://arxiv.org/abs/1708.07624",
}
```

- NAMPI Website: https://uclnlp.github.io/nampi/

- arXiv: https://arxiv.org/abs/1806.10478

```bibtex
@inproceedings{soru-marx-nampi2018,
  author = "Tommaso Soru and Edgard Marx and Andr\'e Valdestilhas and Diego Esteves and Diego Moussallem and Gustavo Publio",
  title = "Neural Machine Translation for Query Construction and Composition",
  year = "2018",
  booktitle = "ICML Workshop on Neural Abstract Machines \& Program Induction (NAMPI v2)",
  url = "https://arxiv.org/abs/1806.10478",
}
```

```bibtex
@inproceedings{panchbhai-2020,
  author = "Anand Panchbhai and Tommaso Soru and Edgard Marx",
  title = "Exploring Sequence-to-Sequence Models for {SPARQL} Pattern Composition",
  year = "2020",
  booktitle = "First Indo-American Knowledge Graph and Semantic Web Conference",
  url = "https://arxiv.org/abs/2010.10900",
}
```

- Primary contacts: Tommaso Soru and Edgard Marx.

- Neural SPARQL Machines mailing list.

- Join the conversation on Gitter.

- Follow the project on ResearchGate.

- Follow Liber AI Research on Twitter.