The pipeline version of lstm_ctc_ocr. Usage:

- run

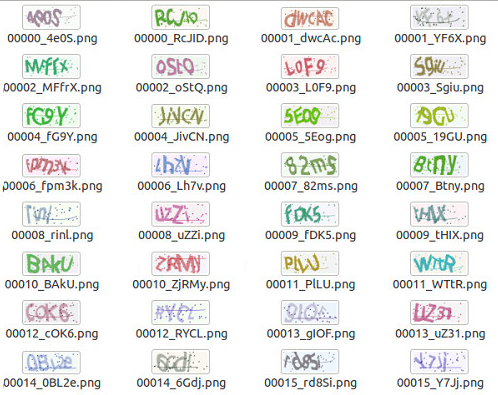

python ./lib/utils/genImg.pyto generate the train images intrain/, validation set invaland the file name shall has the format of00000001_name.png, the number of process is set to16. python ./lib/lstm/utils/tf_records.pyto generate tf_records file, which includes both images and labels(theimg_pathshall be changed to your image_path)./train.shfor training./test.shfor testing
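To illustrate the `00000001_name.png` naming convention, here is a minimal sketch of how an index and a label could be recovered from such a file name. The helper `parse_label` is hypothetical and not part of this repo, and it assumes that the part after the first underscore is the label text.

```python
import os


def parse_label(path):
    """Split a file name like '00000001_name.png' into (index, label).

    Assumption: everything after the first underscore (minus the
    extension) is the label text of the image.
    """
    stem, _ext = os.path.splitext(os.path.basename(path))
    index, label = stem.split("_", 1)
    return int(index), label


if __name__ == "__main__":
    # Hypothetical file name, for illustration only.
    print(parse_label("train/00000001_3f7k.png"))
```

Splitting on the first underscore only keeps labels that themselves contain underscores intact.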

Notice that,

the pipeline version uses warpCTC by default: please install the warpCTC tensorflow_binding first

if your machine does not support warpCTC, use the standard CTC version in the master branch

- standard CTC: use `tf.nn.ctc_loss` to calculate the CTC loss

- python 3

- tensorflow 1.0.1

- captcha

- warpCTC tensorflow_binding
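For the standard CTC path, `tf.nn.ctc_loss` in TensorFlow 1.x expects the labels as a sparse tensor. Below is a minimal sketch, in plain Python, of how a batch of label sequences could be turned into the three pieces a `tf.SparseTensor` is built from. The helper `to_sparse` is hypothetical and may differ from how this repo prepares its labels.

```python
def to_sparse(sequences):
    """Convert a batch of label sequences to (indices, values, dense_shape).

    indices     -- (row, col) position of every label in the batch
    values      -- the labels themselves, in the same order
    dense_shape -- (batch_size, length of the longest sequence)
    """
    indices, values = [], []
    for row, seq in enumerate(sequences):
        for col, label in enumerate(seq):
            indices.append((row, col))
            values.append(label)
    max_len = max((len(seq) for seq in sequences), default=0)
    return indices, values, (len(sequences), max_len)


if __name__ == "__main__":
    # Two hypothetical label sequences of different lengths.
    print(to_sparse([[1, 2], [3]]))
```

The sparse layout lets sequences of different lengths share one batch without padding values being mistaken for labels.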

Note that a sufficient amount of data is a must; otherwise the network cannot converge.

Parameters can be found in `./lstm.yml` (higher priority) and `lib/lstm/utils`.
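As a rough illustration of what "higher priority" means, the sketch below merges a dictionary of default values with a dictionary of overrides, where the overrides win. The function `merge_config` and all keys shown are hypothetical; the actual loading code in this repo may work differently.

```python
def merge_config(defaults, overrides):
    """Return a copy of defaults where values from overrides take priority.

    Nested dictionaries are merged recursively; everything else is replaced.
    """
    merged = dict(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = value
    return merged


if __name__ == "__main__":
    # Hypothetical keys and values, for illustration only.
    defaults = {"TRAIN": {"LEARNING_RATE": 0.001, "BATCH_SIZE": 32}}
    from_yml = {"TRAIN": {"LEARNING_RATE": 0.0005}}
    print(merge_config(defaults, from_yml))
```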

Some parameters need to be fine-tuned:

- learning rate

- decay step & decay rate

- image_width

- image_height

- optimizer?
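To see how the learning rate, decay step, and decay rate interact, here is a small sketch. It assumes an exponential decay schedule of the kind computed by `tf.train.exponential_decay`; whether this repo uses exactly that schedule is an assumption, and the numbers are illustrative only.

```python
def decayed_lr(base_lr, global_step, decay_steps, decay_rate, staircase=False):
    """Exponential decay: base_lr * decay_rate ** (global_step / decay_steps).

    With staircase=True the exponent uses integer division, so the rate
    drops in discrete steps instead of continuously.
    """
    if staircase:
        exponent = global_step // decay_steps
    else:
        exponent = global_step / decay_steps
    return base_lr * decay_rate ** exponent


if __name__ == "__main__":
    # Illustrative values, not the repo's defaults.
    for step in (0, 5000, 10000, 20000):
        print(step, decayed_lr(1e-3, step, 10000, 0.9))
```

A smaller decay step or decay rate shrinks the learning rate faster, which can stall training before the network converges.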

In `./lib/lstm/utils/tf_records.py`, I resize the images to the same size.

If you want to use your own data and use the pipeline to read it, all images shall have the same height.
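If your own images come in different sizes, one option is to scale each to a common height while keeping its aspect ratio. The sketch below only computes the target size; the function `target_size` is hypothetical and this is not necessarily how `tf_records.py` resizes images.

```python
def target_size(width, height, image_height):
    """Return (new_width, image_height) preserving the aspect ratio.

    Assumption: only the height has to match across images; the width
    is scaled by the same factor and kept at a minimum of 1 pixel.
    """
    scale = image_height / height
    return max(1, round(width * scale)), image_height


if __name__ == "__main__":
    # A hypothetical 200x60 image scaled to a height of 32.
    print(target_size(200, 60, 32))
```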

Update: note that different optimizers may lead to different results.

Read this blog for more details and this blog for how to use `tf.nn.ctc_loss` or warpCTC