Replies: 37 comments 32 replies

-


Hi Klaus, I am deeply impressed with how you have reorganized the code, and I totally agree that 1000+ lines of code in a single file is cluttered and confusing. I'm in favor of anything that makes the current software more accessible and easier to modify. And the way commands, devices and GUI screens are managed seems much cleaner and clearer. The long-term goal for this project is still to be able to tweak these things without making changes to the code. But I think your approach is already very good groundwork for that. With some examples for devices and commands and a few new pages of documentation, this will definitely be very helpful for new users. If that's OK with you, I would love to include parts (or all) of your branch in the software. What would be the best way to go about this? If anyone else has any suggestions on this topic, please feel free to bring them in. I still think @MatthewColvin's Abstraction branch is great. I just fear that the object-oriented approach is too complicated for some hobby users to modify. At least that's the case for me.

-

Hi Max, I'm glad you like it :-)

I tried to keep the code as simple and as readable as possible. I'm afraid that if there is ever a solution that needs no manual code changes, only a nice GUI where you can click together everything you need, the code itself would be so complicated that only a few people would be able to maintain it, no matter whether it is object-oriented or not. But we will see what happens in the future ... I agree, as long as people have to modify the code, object-oriented code can be overwhelming. BTW: Linus Torvalds also does not like C++, but sometimes he is very strict in his opinions ...

-

You are probably right that completely UI-based customization will be extremely complex to implement. Maybe it's enough to start with a set of common UI screens and devices. I have some older IR remotes that I can try to turn into device profiles, just to create a bigger set of examples. But I'm not so sure about other types of (Bluetooth) devices. The Fire TV and Chromecast should be very popular. And surely the community can also contribute new devices. The documentation in this GitHub repo is definitely something that has to be expanded (currently there are 4 whole lines of text about the software). It would probably be best to split this into multiple pages about hardware, a basic code explanation, getting started, configuring a new device, etc. If you want to contribute a guide for your code and functions, that would be awesome. But of course you don't have to do this all by yourself. So far, everything about the new code that I have been able to test works. One small thing is disabling Bluetooth. If "ENABLE_BLUETOOTH" and "ENABLE_KEYBOARD_BLE" are no longer defined, the compilation fails. Maybe I'm doing something wrong, but it would be nice to be able to turn Bluetooth off when it's not needed, since it increases the boot time considerably.


-

Fire TV is already fully supported through the BLE keyboard or the MQTT keyboard. The old Chromecast cannot be controlled in any way; you can only switch the HDMI input of your TV or AV receiver. I have an old Chromecast. If someone wants to pair both a Fire TV and a new Chromecast to the OMOTE BLE keyboard, this might need some more work. It seems to be possible, but it is not yet directly supported.

Sure. I reorganized everything into folders. Please have a look if you like it. Devices and corresponding GUI (if there even is one) are in the same folder. While doing this, I also created devices for AppleTV (Sony IR) and smarthome (MQTT).

I would love to do it for the software. We could use the GitHub wiki of the repo for this, like I did here

You did nothing wrong. As soon as a device is disabled or completely removed from the code, it can happen that the commandHandler, the GUI or the keys are still using commands from that device. This is what happened here. Normally, if you change something in the devices, you have to change the code of the different frontends (gui, keys, commandHandler) accordingly. But I understand: since it is likely that people want to deactivate WiFi, BLE or both (and there are already #defines for that), deactivating should work out of the box. I changed the code, so now you can simply deactivate them without further changes. We could also deactivate the BLE keyboard by default.
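A minimal sketch of how deactivating BLE could work without touching the frontends: every place that references a BLE command is wrapped in the same preprocessor guard as the BLE device itself. The flag name ENABLE_KEYBOARD_BLE is taken from this thread; the function buildKeyCommands and the command names are hypothetical and not taken from the OMOTE code.

```cpp
#include <string>
#include <vector>

// Assumption: ENABLE_KEYBOARD_BLE is a plain "defined or not" flag.
// Comment out the next line to build without the BLE keyboard.
#define ENABLE_KEYBOARD_BLE

// Hypothetical frontend helper: collects the commands the keypad may use.
std::vector<std::string> buildKeyCommands() {
  // Commands that are always available (names are placeholders).
  std::vector<std::string> commands = {"IR_VOLUME_UP", "IR_VOLUME_DOWN"};
#ifdef ENABLE_KEYBOARD_BLE
  // Only referenced when the BLE keyboard is compiled in, so removing
  // the flag cannot leave a dangling reference behind.
  commands.push_back("KEYBOARD_BLE_PLAYPAUSE");
#endif
  return commands;
}
```

With the flag removed, the same function still compiles and simply returns the two IR commands.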


-

Oh, so the BLE keyboard can already control lots of different devices as long as they recognize a keyboard. That's perfect, I didn't know that it's this simple. It even shows up on my PC and iPhone and is recognized right away. Being able to connect to multiple devices would be even better, but you're right, we don't have to solve this immediately.

What I originally had in mind was a separate folder for devices and another for GUIs. But one folder for each device seems perfectly fine to me. That way each device has its commands and gui in one place. Thanks!

Bluetooth is probably a useful feature for a lot of people, especially with the keyboard, so we can leave it enabled by default. In any case, I hope I will have time tomorrow to try and adapt the modular code so that I can use it to control my devices, simply so that I can better understand how everything works. One thing is that I would like the hardware keyboard to change commands based on the current device, and I am not sure what the best way to achieve this is. Does this require an extra keyCommands array for each device? The current software switches the hardware keyboard assignment based on the device that is selected on the screen. At least that is what I am used to with universal IR remotes, but maybe it is not so intuitive to have the buttons change their assignment like that.


-

Well, I think, first we should clarify some terms. A device, as the term is currently used in the software, can be different things:

In most cases you want to use scenes, e.g.

Each scene normally uses exactly one media player and some of the other devices. So I think what we need is a keypad layout for each scene, right? Scenes are a concept already available in the software. I would extend this so that each scene:

Switching the scene is currently done with one of the four lower buttons of the keypad. If someone needs more scenes, HOLD could also be used, so overall it is easy to support up to eight scenes. Maybe you have seen that the variable "currentDevice" is no longer available in the software. Some or even all screens may be independent, in the sense that they are not related to a scene at all.

Only the "AppleTV" screen depends on the scene, or to be more precise, it should only be available if the scene "AppleTV" is active. In the original software, all the screens were treated as devices (at least currentDevice was set), which does not really fit in several ways, I think. That's why I removed "currentDevice". I hope you like the idea of scenes. BTW this is exactly how Logitech Harmony does it. I think this concept is proven. I used a Harmony for years and am still using it. So my proposal is:

-

As I have seen, you are using RC5 IR commands. I added this to the commandHandler. or for your Technisat like this and use the command in a screen like this For use in the keypad, you have to explicitly define commands for every key (as with APPLETV_KEYPAD_EVENT_1). If you need more support, you can send me a list of the commands in keyMapTechnisat and virtualKeyMapTechnisat and what they mean (e.g. names for the commands), then I can create a Technisat device for you. Same for Apple/Sony, With you simply don't know what is going on.

-

I also moved some more files and folders around. Keys are now in the folder "gui_general_and_keys".

Between "devices_*" and "gui_general_and_keys" sits the "commandHandler" as the glue between them. The commandHandler is located in the base directory. Hope this makes things clearer.


-

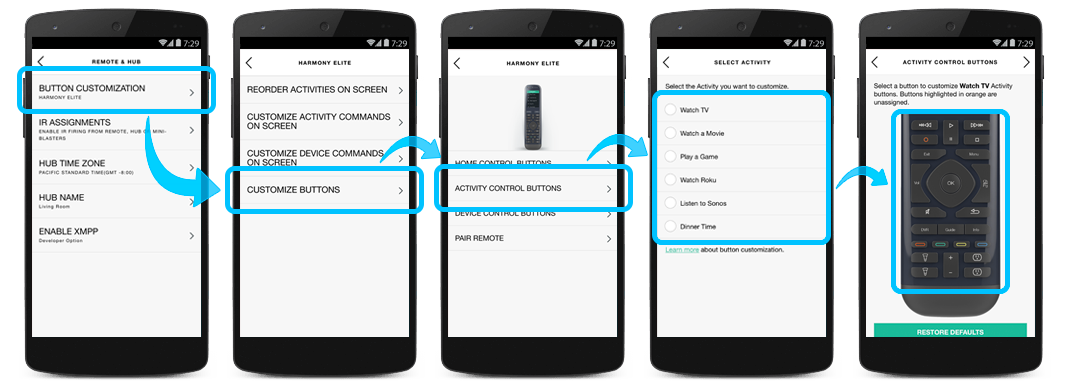

To be honest, I never actually owned a Harmony remote, so thanks for clarifying how it works. From what I read in the manuals, I thought that the physical buttons change their mapping based on the device they have to control. Logitech mentions activity control buttons, which makes sense since they can control multiple different devices with one layout. The term activity might be better than device for what I had in mind originally. I like how you implemented scenes, and having sequences is probably very useful as well. For BLE and MQTT devices that should not be a problem, but I am a little worried about IR devices. The Harmony has a hub and even extra IR transmitters, but the OMOTE has only its tiny IR LED. How can we make sure that the sequence is received by all the devices?


-

Right, Logitech calls it activity. But I think a more common term now (Logitech introduced this years ago) is scene, so I would use this term.

IR commands should be sent repeatedly. Of course this only works for commands like PowerOn, not for PowerToggle. I think we don't need this general approach in OMOTE, since for a scene you will simply define the sequence of commands and the delays between them programmatically. This is even more detailed than what Harmony can do.
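A scene start sequence with repeats and delays could be sketched like this. Everything here is an assumption for illustration: SceneStep, runSceneSequence and the command names are hypothetical, and the send and wait callbacks stand in for whatever the firmware uses to emit a command and to pause.

```cpp
#include <functional>
#include <string>
#include <vector>

// One step of a scene start sequence (hypothetical structure).
struct SceneStep {
  std::string command;  // name of the command to send
  int repeats;          // how often to send it; only safe for idempotent commands like PowerOn
  int delayAfterMs;     // pause after this step, e.g. to let a TV boot
};

// Runs all steps in order and returns how many commands were sent.
int runSceneSequence(const std::vector<SceneStep>& steps,
                     const std::function<void(const std::string&)>& send,
                     const std::function<void(int)>& wait) {
  int sent = 0;
  for (const SceneStep& step : steps) {
    for (int i = 0; i < step.repeats; ++i) {
      send(step.command);
      ++sent;
    }
    wait(step.delayAfterMs);
  }
  return sent;
}
```

Passing the sender and the delay as callbacks keeps the sketch independent of IR, MQTT or BLE, and a toggle command would simply get repeats set to 1.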

-

To have a more reliable IR connection, we could also create a small device, having only an ESP32 and an IR LED in it, nothing else. This small device could be placed next to the IR receiving devices (TV, amp, ...). The OMOTE sends MQTT messages to it, and the small device sends the IR signals. A good name for such a device could be "OMOTE IR satellite". Easy to do, one weekend. The satellite could also be used if the amp is in a cupboard without line of sight to the OMOTE. Harmony also had a small additional IR sender for this purpose. For me the IR performance of the OMOTE is good. Not as good as the Harmony Hub sitting next to the devices, but ok. I'm 3-4 meters away from the devices. If sometimes an IR signal is not recognized, I repeat it. Not a big thing. The start sequence for a scene can also be repeated with an additional key hit. And this sometimes happens with the Harmony Hub as well ... And I have seen a comment here about a hardware change for having a more powerful IR LED in the OMOTE. Let's see what the future brings ...


-

Yes, that is something I find very useful, especially if you have multiple IR devices with lots of buttons that cannot be mapped to a single keyboard layout. Would this mean that we need a keyRepeatModes, keyCommands_short and key_commands_long for each scene? Maybe these three can be combined into one keymap. At least then there is only one keymap per scene. Or how would you do this? Initially I was against the idea of a hub. The remote was supposed to be completely independent so that you don't need additional hubs and docks. But I can absolutely see the benefits of having some sort of IR repeater, especially since it would be optional. The remote could check if it is connected to its satellite and decide if it should send the IR command itself or make the hub do it via MQTT. And it would be pretty easy to build one. I guess I will have to put that on my todo list :)
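The "send it myself or let the satellite do it" decision could look roughly like the sketch below. It is only an illustration: IrRoute, chooseIrRoute, satelliteTopic and the topic layout are hypothetical names, and how the remote would actually learn that the satellite is online is left open.

```cpp
#include <string>

// Where an IR command should be emitted from.
enum class IrRoute { LocalLed, SatelliteViaMqtt };

// Prefer the satellite only when both the MQTT link and the satellite are up;
// otherwise fall back to the remote's own IR LED so it keeps working standalone.
IrRoute chooseIrRoute(bool mqttConnected, bool satelliteOnline) {
  return (mqttConnected && satelliteOnline) ? IrRoute::SatelliteViaMqtt
                                            : IrRoute::LocalLed;
}

// Hypothetical topic layout for forwarding a command to the satellite.
std::string satelliteTopic(const std::string& deviceName) {
  return "omote/satellite/ir/" + deviceName;
}
```

Because the fallback is always the local LED, the satellite stays optional, which matches the goal of a remote that works without extra hardware.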


-

Maybe I would define a default map, and delta maps for each scene that overwrite only the keys you have in the delta map. I expect that the scenes share a lot of common keys. And as a user you could decide to simply put all the keys into the delta map, which would overwrite all keys from the default map. You could do it as you want. About combining: currently we have three maps. The code is laid out so that you can see where each key is. Not necessary for the compiler of course, but at least for my eyes much easier to read. If you don't like three maps (or delta maps) for each scene, these three maps could be combined into a single map which would become a little bit wider. Do you like this more? To me this would be fine.
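The default map plus per-scene delta map idea can be sketched with the same std::map<char, std::string> shape that keyCommands_short uses elsewhere in this thread. The names KeyMap and effectiveKeyMap are hypothetical and not part of the OMOTE code.

```cpp
#include <map>
#include <string>

// Key character -> command name, same shape as keyCommands_short.
using KeyMap = std::map<char, std::string>;

// Builds the map that is active in a scene: entries from the scene's delta
// map win, every key not mentioned there falls back to the default map.
KeyMap effectiveKeyMap(const KeyMap& defaults, const KeyMap& sceneDelta) {
  KeyMap result = sceneDelta;
  // std::map::insert never overwrites an existing key, so this only
  // fills in the keys the scene did not redefine.
  result.insert(defaults.begin(), defaults.end());
  return result;
}
```

A scene that lists every key in its delta map ends up ignoring the default map entirely, which is the "do it as you want" case described above.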

-

I think that depends on where the three maps are in the code. If they are placed in the device file, I don't mind. But the key file would get convoluted really quickly if there are three maps for each device.

-

They would not go into the key file, and not into the device file.


-

OK, this explains why it makes sense for you to put the scene switching on dedicated buttons.

Yes, exactly. This was meant to be similar to an LCD Harmony remote like the Elite. The screen has two extra capacitive buttons to invoke an "activities" and a "devices" menu. After selecting a device, the remote switches to a numpad or channel list which fits the device type. The only thing I wanted to do differently was to get rid of the selection menu to make the programming easier and the UI clearer. So it is just this:

Now having more than one screen per device either means adding more screens to this side-scrolling menu, or we need another level in the form of a menu or dedicated device switching buttons. In any case, it adds complexity. If there are scenes with start and end actions (like switching inputs), the simple menu does not work anymore, because you would have to scroll through and unintentionally initialize devices that you do not want to use. So I think with scenes, an extra menu is probably unavoidable.

How do you access this menu? Logitech's approach of having a dedicated button seems good. We could do the same with a UI button. Gestures like swiping from the bottom of the screen could work, too. But it is probably quicker to just press a button, even if that means losing screen real estate. The menu button could be the breadcrumb that is already at the bottom of the screen. It is not really needed since the sideways swiping does not change devices anymore. So it could become both the indicator for the current device/scene and the access to the menu. Having two separate UI elements for the breadcrumb and the menu could be more intuitive. At least that is the way the YIO Remote 2 works (they have a physical home button below the screen). If that is the way to go, I would prefer the menu as in your first mockup and hide the settings etc. in this menu as well, so the regular UI is just for remote control actions.


-

Exactly. I think we need a dedicated start screen for selecting the scene. Depending on the scene, we have different screens to the right. That seems to be the best way to do it. So for each scene you could

With that approach you can achieve all three of my mockups. It would be configurable which scenes you want to have and which screens you want to use in them.

Scene indicator

Accessing the first page

For the beginning I would use a hardware key.

Accessing dedicated device pages

To be honest, I never used the device pages in Harmony. I always only needed the most common commands on my remote. Is this concept ok for you? Then I would start implementing this so that we can see if we are happy with it. BTW, this concept means that we have to create the screens (or tabs in terms of lvgl) dynamically. But this is needed anyway, because as I saw we are still struggling with memory. I'm already working on this. The best way to save memory seems to be to create the tabs dynamically and to delete them as soon as they can no longer be seen. Moving bufA and bufB to the heap allowed me to have 100+ screens. But if BLE and WiFi are activated, it is much less, only 6-7. So there are still some tricks left we could do. I will start a dedicated discussion about this topic in the near future.

-

Scene indicator

Yes, I have seen where you put it and I think that is alright. I feel a little sorry for my old page indicator, but that will not fit with this approach.

Accessing the first page

The key layout is not really made to have a dedicated menu button like e.g. the YIO Remote. It would have to be recognizable as a home button of some sort. I would prefer a touchscreen-based command like swiping up from the bottom of the screen. I can try to implement this for a mockup, and I am sure it can be done with LVGL. I imagine it could look and work something like this:

So you have a menu layer with scenes, devices, settings and so on. And when you enter a scene or device, you can have multiple pages. In both the menu and the devices layer you can switch pages by side scrolling. To get back to the menu you could pull up from the bottom of the screen (the page indicator could also indicate that there is a swipe-up gesture, similar to iOS). A menu layer would also allow the hardware keys to be used for controlling the UI. Buttons can be easily configured as keyboard inputs in LVGL. So you only have to touch the screen once to enter the menu and then use the arrow keys for everything else.

However we do it, it will be a big change from the way the remote worked with my old code. I am still not completely sure if we should add this extra complexity. Personally I would not need scenes at all, but former Logitech users like you might miss them. Then again, the previous version of the YIO remote only had a one-layered UI, too: https://yio-remote.github.io/documentation/


-

Yes, great, looks good. And if someone wants to define a hardware key as home, this can be done very easily. Just put the corresponding command in the keypad map. An example of how to swipe up would be helpful for me. The simulator does not really work for me yet. Maybe @MatthewColvin can help me in the other discussion about the abstraction branch. Something else that could really help me is this thread:

Great idea.

Yeah, this would be a big change. To be honest, my last published version is also already fine for me. I think scenes are very helpful because you can define different keyboard layouts bound to them. This is exactly what I needed. For me, having only two or three screens is fine, and they can already be context sensitive to the scenes they are in. I'm more used to the keys, so the current state would be totally fine for me. But others might be much more screen focused, and I think for them the screen concept you showed would be very helpful. I would love to spend some time on this and to implement it. But of course, someone should see a benefit in it. Is anyone else reading this?

-

@KlausMu The crash on my remote can be narrowed down to this line in sceneRegistry.cpp:


-

I just tried out the LVGL gesture event, which would be a good start for what we want to achieve. You can set it up with this:

Inside the event you can check to make sure it was a swipe-up gesture (LV_DIR_TOP). This would allow for basic gesture detection. If we want a smooth transition, it gets more complicated.

-

Dear @KlausMu, I really like the changes you made to clean up the code base. My understanding is that the behavior you envision for the remote is very close to what I have in mind as well. However, in the current code base all devices are actually hard coded. I think this is fine for people who feel comfortable changing and re-compiling the software, but it would be better to be able to make this configurable without re-compiling. I think this is possible, and I have already started to document a possible approach. The first rough draft is here: https://github.com/bittnert/OMOTE/blob/modular-approach/doc/Omote%20remote%20overview.md

To configure the device, I would imagine (in the long run) a web interface which is stored on the remote control and which can be loaded. In a first step, however, I would change the current approach you have on your branch. Currently there are devices like "yamahaAmp", which seems to be an amplifier from Yamaha that uses the NEC IR protocol. I would change this so the firmware does not know what a Yamaha amp is and does not know how to command it (it has no idea what the power-on command is, for example). Instead, the firmware knows what the NEC IR protocol is and how to talk to a device using this protocol. The configuration structure would then contain the information needed to command a specific device.

I think as a first proof of concept, it should be possible to define such a config structure and then fill it within the C code. The only change for now would be that such a config structure needs to be filled to define how to communicate with a device; the firmware would still need to be re-compiled to change it. But in the future, a configuration file in flash could be used, which can be uploaded over WiFi (e.g. from a web interface) so the firmware does not need to be re-compiled.

I pulled your branch into my repo and will try to make the changes as a proof of concept so you can see what I mean exactly and we can discuss further. BR
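A config structure of the kind described here might look like the following sketch. All names (IrProtocol, IrDeviceConfig, lookupCode, exampleAmpConfig) are hypothetical, and the numeric codes are made-up placeholders, not real codes of any Yamaha or other device.

```cpp
#include <cstdint>
#include <map>
#include <string>

// Protocols the firmware knows how to speak (illustrative subset).
enum class IrProtocol { NEC, RC5, Samsung, Sony };

// Everything device-specific lives in data, not in firmware logic.
struct IrDeviceConfig {
  std::string name;                            // e.g. a user-chosen label
  IrProtocol protocol;                         // how to encode the codes
  std::map<std::string, std::uint32_t> codes;  // command name -> raw code
};

// Looks up a command; returns false if the device does not define it.
bool lookupCode(const IrDeviceConfig& device, const std::string& command,
                std::uint32_t& codeOut) {
  auto it = device.codes.find(command);
  if (it == device.codes.end()) return false;
  codeOut = it->second;
  return true;
}

// Proof-of-concept config filled in C++; later this could come from a file.
IrDeviceConfig exampleAmpConfig() {
  return IrDeviceConfig{"exampleAmp", IrProtocol::NEC,
                        {{"POWER_ON", 0x00FF00FFu}, {"VOLUME_UP", 0x00FF40BFu}}};
}
```

The firmware side would then only need one sender per protocol, while adding a device means adding one more filled-in structure.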

-

A lot of interesting discussions to catch up on, and lots of topics covered. I think the branch is in a very good place currently, and would be worth submitting as a PR soon to gather more in-depth feedback on the code. My PCB arrived 2 days ago, and since then I've been tinkering with Klaus's branch as I'm only missing the case & buttons. For reference, I am a programmer with mid-level experience in C/C++ and zero prior experience with the ESP32 platform or PlatformIO itself. Even then, I was able to jump in without a lot of hassle. Without reading any of the above discussion before tinkering:

My thoughts summarised: perfect is the enemy of done. The branch as-is makes it much easier to get started tinkering. It gives users (developers) a much better grasp of how to start writing new screens, device integrations, and scenes than the current repository. It's a very good step in the right direction. There's SO MUCH more to improve, but we don't need to do it all in one go! Trust me: merge what's in the branch now, and you'll get much more activity with people wanting to add their own integrations based on existing examples. Now, in terms of topics discussed:

#define BTN_PWR 'o'

#define BTN_STOP '='

std::map<char, std::string> keyCommands_short {

{BTN_PWR, ALLDEVICES_POWER_TOGGLE}

};

In terms of open points from my perspective, I only had one real question @KlausMu - is switching scenes the only way to run a batch of commands? For example, I'd want one of the physical buttons to

Currently this doesn't seem to be possible? Again, generally I will stick to one scene to lower UX complexity. Awesome work so far though! Thanks to all the hard work of everyone involved!


-

I agree with @WizardCM 😄. The modular code is a better starting point for people new to this project and not everything has to be implemented and polished from the start. @KlausMu If that's OK for you I would love to merge your modular code and make this the default branch. Then we can continue from there.

-

Sure, I think it is already good enough.

I will create a PR on Monday.

There are already some topics on my todo list, and I would like them to be published soon, in this order:

- [x] documentation on how to understand, use and modify the code. Is there anything special you want me to explain? I will write a page that can be published in the GitHub wiki

- [x] memory optimization of lvgl. This is indeed a major task, and e.g. for the sliding event I still need a solution. But it is important, because with WiFi and BLE enabled you will quickly get memory issues

- [ ] simulator for creating pages in Windows (and Linux?)

- [ ] scene selector page as start page

- [ ] available gui pages based on the currently active scene. Hide pages not needed in a scene

- [ ] make gui actions context sensitive for the currently active scene

-

I have now written documentation for the new main branch. Please have a look here. If you have any feedback, please let me know.

-

Hi all, I pushed a new version of my branch. This new version implements the memory optimization described here. This was really a big change in the software, and also a complex one. To me this was the most important missing feature. Otherwise, we would constantly have had memory issues.

I also created a small memory status text at the top of the screen which can be activated or deactivated at compile time, showing both ESP heap and lvgl memory. The numbers turn red when resources are running out. Now only three tabs stay in memory at the same time. As long as these three tabs do not become too big, everything is fine. With that you can have 100 tabs and more without any problem. Swipe through the tabs and watch the memory status. It is very interesting to see how lvgl memory usage goes up and down and what happens if you use WiFi. Some tricks were necessary so that you cannot notice that the whole content of the screen is recreated after each swipe. I think the result is very good.

Please have a look. It would be very helpful if you could test it out. And please also read the new section in the Wiki. I reduced the default memory for lvgl from 48k to 32k, because now this seems to be enough. I think with 48k you might get trouble when using WiFi. @WizardCM maybe this is what you encountered. I also introduced a Please see this description if 32k does not fit your needs.
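The "only three tabs stay in memory" rule boils down to a sliding window around the current tab. The sketch below only computes which tab indices would be kept; the name tabsToKeepInMemory is hypothetical, and the actual creation and deletion of lvgl objects in the branch is not shown here.

```cpp
#include <vector>

// Returns the indices of the tabs that should exist in memory:
// the current tab plus its left and right neighbours, clipped to
// the valid range [0, tabCount).
std::vector<int> tabsToKeepInMemory(int current, int tabCount) {
  std::vector<int> keep;
  for (int i = current - 1; i <= current + 1; ++i) {
    if (i >= 0 && i < tabCount) {
      keep.push_back(i);
    }
  }
  return keep;
}
```

After each swipe, every tab outside this list could be deleted and the newly visible neighbour created, so memory use depends on the size of three tabs rather than on the total number of tabs.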

Unfortunately you need to change your GUI files, if you already have created some. You have to

-

Hi all, or, if you already have cloned the repository: Note: New feature versions will no longer be published in my repository, but directly here in a feature branch. The main new feature is, as the title of the branch suggests, the simulator directly running on Windows or Linux:

With the simulator, you can run the GUI code directly on Windows or Linux and don't have to flash the firmware to the ESP32 for each iteration while developing the LVGL gui. This can dramatically reduce the time needed for adjusting the software to your needs. The simulator uses exactly the same GUI code that is used for compiling the ESP32 firmware. So when you are finished with your GUI code, simply compile the code for ESP32 and flash it. For comprehensive documentation on how to set up the simulator, please read the wiki page "Software simulator for fast creating and testing of LVGL GUIs". Memory usage was optimized again and another 25k were saved. Thanks @MatthewColvin for your valuable hints. There have been a lot of other changes in the code; especially the folder structure has changed significantly. Code is now divided into

The wiki documentation "How to understand and modify the software" has been updated as well. My advice is not to try merging the new version, but to start with a fresh installation. If you already changed the code, then

So if you are curious about the new feature branch, feel free to test it. Feedback is very welcome. After about one week, the feature branch will be merged into the main branch.

-

Sending MQTT messages is now supported in the simulator, both in Windows and Linux/WSL2. An example of how to subscribe to MQTT messages and how to propagate them to the GUI is also included. It also shows how to send an update request to your home automation software as soon as OMOTE has connected to WiFi. With that, your home automation software can send the states of the smart home devices known to OMOTE. @WizardCM I think MQTT subscription is something you wanted to use. Everything is still in the feature branch "lvgl-simulator" https://github.com/CoretechR/OMOTE/tree/lvgl-simulator

-

A new feature branch called "scene-selection" is available for testing. or, if you already have cloned the repository: Features in this branch:

You can deactivate the "scene selection gui" and scene-specific gui lists if you want to keep the old behaviour (only one single list of guis, available in all of the scenes). Please see the updated Wiki: This time, there are not so many code changes. I also created a PR if you want to comment:


-

Dear all,

I would like to start a discussion on what a good starting point of the software could look like for people willing to use OMOTE.

I was at the same point some weeks ago when I started using OMOTE. With the current software (by software I mean the file "main.cpp") I had some issues

So I started to break up the existing code into several files. While doing this, I understood what everything is good for.

It's much easier to understand software if you have separate files for every major thing.

Finally I ended up with a new version including the following features:

From my perspective, this approach would be a much better starting point for people willing to use and to configure their OMOTE to their needs.

Don't get me wrong, people would still need to modify the source code. My approach is still far away from what e.g. Logitech Harmony provides with their Windows or Android app, where you can configure everything with a nice user interface. To achieve this, A LOT MORE effort would have to be put into the software.

Here are some hints on how to use and to understand the code, if you are interested:

How to add a device:

Create two files similar to e.g.

and call

init_<yourDevicename>_commands() in main.cpp

How to add a gui (a screen):

Example for numpad:

and call

init_gui_numpad() and init_gui_pageIndicator_numpad() in the main gui file gui.cpp

How to add a completely new type of commands:

Currently the commands "IR global cache", "IR NEC", "IR SAMSUNG", "MQTT", "BLE Keyboard" and "Special" (e.g. for Scenes) are supported.

If you need more types of commands, add them in

commandHandler.h

The actual sending of a command using the different technologies like infrared, MQTT and so on is done in

commandHandler.cpp

The handling of scenes is also done there.
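To illustrate the split between command types and the code that sends them, here is a simplified sketch. It is not the actual commandHandler code: CommandType, CommandData, registerCommand and executeCommand are hypothetical names, and instead of driving real hardware the dispatcher only returns a text describing which transport would be used.

```cpp
#include <map>
#include <string>

// The command types listed above, as an enum (names are assumptions).
enum class CommandType { IrGlobalCache, IrNec, IrSamsung, Mqtt, BleKeyboard, Special };

// What is stored per command: its type and a type-specific payload.
struct CommandData {
  CommandType type;
  std::string payload;
};

// Registry filled by the devices during initialization.
std::map<std::string, CommandData> commandRegistry;

void registerCommand(const std::string& name, CommandType type,
                     const std::string& payload) {
  commandRegistry[name] = CommandData{type, payload};
}

// Central dispatch: one switch decides which technology handles a command.
std::string executeCommand(const std::string& name) {
  auto it = commandRegistry.find(name);
  if (it == commandRegistry.end()) return "unknown command: " + name;
  const CommandData& cmd = it->second;
  switch (cmd.type) {
    case CommandType::IrGlobalCache: return "IR (Global Cache): " + cmd.payload;
    case CommandType::IrNec:         return "IR (NEC): " + cmd.payload;
    case CommandType::IrSamsung:     return "IR (Samsung): " + cmd.payload;
    case CommandType::Mqtt:          return "MQTT: " + cmd.payload;
    case CommandType::BleKeyboard:   return "BLE keyboard: " + cmd.payload;
    case CommandType::Special:       return "Special: " + cmd.payload;
  }
  return "unhandled command type";
}
```

Adding a new type of command in this sketch means adding one enum value and one case, while GUI and keypad code keep referring to commands by name only.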

How to define how the keypad works

This is done in

keys.cpp

You can define for every key how it should behave in case of a long press. Three modes are supported:

For example I am using:

Then you can define for every key which command should be sent for a short press, e.g.

and for a long press:

If you need to, you can also change the repeat rate:

If you are interested, please try the following branch:

https://github.com/KlausMu/OMOTE/tree/modular-approach

Looking forward to your feedback.
